When one turns to the magnificent edifice of the physical sciences, and sees how it was reared; what thousands of disinterested and moral lives of men lie buried in its mere foundations; what patience and postponement, what choking down of preference,… how absolutely impersonal it stands in its vast augustness—then how besotted and contemptible seems every sentimentalist who comes blowing his smoke-wreaths, and pretending to decide things from out of his private dream!

—William James (1897)

When I was young, the continents did not move. Well, the continents were moving, all right, only nobody knew that they were. Geologists said the continents did not move, and that meant that nonscientists could not possibly know otherwise. Then, partly because of data collected in the International Geophysical Year (1957–1958), it was discovered that the continents do move after all. So, as is well known to everyone today, even small children, the continents move. But before this knowledge became an official part of science, nobody could know this.

It is not as though nobody thought that the continents moved before then. Alfred Wegener (1880–1930) in particular had argued from 1915 on for what was then called continental drift or mobilism. His arguments, as we can see now, were cogent and powerful: The geological formations of the continents on the east and west sides of the Atlantic Ocean match up just as if they had been torn apart at some time in the past, like two halves of a torn dollar bill. But Wegener did not have a mechanism to explain continental motion. Plate tectonics provided that mechanism: The continents are carried along by slow convection currents in the hot rock of the underlying mantle. Once the scientific community in charge of this matter had concluded that this is how it all worked, everyone, even children (including me at the time), could know it.

Science defines what is socially accepted as knowledge of the natural world, and knowledge itself is not the domain of any individual but of humankind as a whole. We rely on the ears and eyes and brains of countless other human beings (many of them now long dead) in order that we may know about Antarctica, bacteria, or dinosaurs. No one among us could possibly gather the mountains of evidence and think through the mountains of theory that support even the most common scientific facts taught to our children in school. We must rely on scientific authority—there is no other way. Science is often portrayed as a body of knowledge that rests solely and solidly on the facts themselves, but the fact is that nearly all the science that any one person knows, including scientists, rests on authority. Fortunately, scientists have created a complex hierarchy of scientific authorities to protect the purity of science. Like all things human, it is not perfect. Nevertheless, this modern priesthood of knowledge does work, and is responsible for the wonders of the modern world.

As a youngster I loved science. I loved dinosaurs, atoms, chemicals, radiation, rockets, the solar system, and the myriad of little stars that appeared around the Pleiades when I looked at them through my small reflector telescope. I loved the certainty of science and the thrill of looking through the veil of appearance directly at the underlying reality. I believed in science. My faith was not shaken when Wegener’s theory of continental drift (as it was then called) went from being false to being true. As I saw it, science had searched for new evidence, had found it, and had done the right thing by changing its mind. My faith in science was unshaken.

Then I ran into Einstein’s theory of relativity. That was something else altogether, for when his theory became true it changed the foundations of physical science itself. As for so many people, Newton’s laws made perfect sense to me. Like many physicists, I felt the truth of Newton’s laws in my guts every time I rode a merry-go-round. But Einstein’s theory required enormous subtlety even to imagine. I couldn’t imagine it, actually, but I took it on authority that Einstein was right, and tried harder to grasp his vision. Around the time I entered university I was reading one of Einstein’s own introductions for the nonphysicist, The Meaning of Relativity (1950), when I suddenly realized what was holding me back: I kept thinking in Newtonian terms, by gut instinct, so to speak. I kept trying to translate relativity into Newtonian physics—but the translation was impossible. Relativity only made sense if, gut instincts notwithstanding, Newton was wrong.

The scales fell from my eyes, and I began to see what Einstein was saying. When you glimpsed the world through Einstein’s theory, it was obvious that Newton was wrong. But if Newton was wrong—and surely he was, even if he was wrong only by a little bit when it came to everyday objects and speeds—then Newton’s laws had never been proven in the first place. What is proven cannot turn out to be false. My faith in scientific authority was shaken. It seemed to me that even when we think science is looking through the veil of appearances, it may just be looking at another set of appearances. My studies of quantum mechanics over the next few years tended to confirm that opinion. We can never transcend ourselves, so we can never leave appearance entirely behind. There is no such thing as scientific proof. But if there is no scientific proof, how does science get to tell us what we can or cannot know? Searching for the answer to that question led me to a career in the philosophy of science, and eventually to environmental science and this book.

In this chapter we have a look at pure environmental science, or environmental science as such, as philosophers say. If we were studying basketball, for example, basketball as such would be defined by the rules of the game. We would discover in those rules that the very idea of basketball is that of a noncontact sport, for that is what the rules say and what the various sorts of fouls and penalties are designed to create. The rules create the game. Knowing what pure basketball is, we would probably be surprised when we took a look at professional basketball, for it involves lots of contact, with huge players shoving and pushing each other right under the noses of the officials, no fouls called. Actual basketball turns out to be an imperfect realization of pure basketball, with its own special problems, solutions, and (dare I say it?) beauty. The same is true for other human endeavors, and science is no exception.

As we saw in Chapter 5, actual environmental science is science applied to the promotion of environmental health. It is not value neutral; it does not seek truth for the sake of truth; it moralizes; it is trying to reshape human life itself into a form that is better for the environment (where, as noted previously, environment stands for all of nature except humankind and its works). It has, to put it bluntly, many of the properties of the sociopolitical ideologies that have gripped our species periodically. In this context it is important not to take our eye off the ball, as it were. If ever there was a time when we needed pure (or at least purer) environmental science, it is now. Since we cannot find something until we know what we are looking for, we need to get a better idea of just what environmental science would be if it were closer to its ideal form. That is our goal in this chapter. We begin by considering science as such. Like most human enterprises, science evolves and its rules are revised as its practitioners deem necessary. So we will start with a bit of science history.

Some readers may find this chapter rather dry and scholarly, which is not surprising, perhaps, since it deals with my own academic specialty, the philosophy of science. Its main ideas are quite simple, and to many people quite obvious: first, that there is no such thing as scientific proof (as the histories of Wegener and Einstein show us), and second, that science cannot tell us what we ought to do, what is right or wrong, what is ethical—in short, that it has no authority over values. So if you find yourself bogging down in the scholarly technicalities of this discussion, please jump ahead to Case Study 6 and Theses 7 and 8. One main function of this chapter is to prepare us for Case Study 7, which is an extended study of global warming theory. The importance of the global warming issue for the human species and for the rest of nature is enormous. It is the issue that will define the future of environmentalism and environmental science for generations to come.

There is, however, no way to come to grips with global warming except through the scientific technicalities involved. To a large extent these technicalities can be rendered intelligible to nonspecialists, and that is what I am attempting here. Indeed, it is important that as many of us ordinary civilians as possible make this effort. I urge all readers to read as much of Case Study 7 as they comfortably can. But I also realize that scientific technicalities simply are not some people’s cup of tea, and they should feel free to jump ahead to Chapter 7, which, by way of preview, is about our sense of the sacredness of nature, which is at least as important as (and much more permanent than) the issue of global warming. It is as if our philosophical expedition has as its centerpiece a planned ascent of a substantial mountain peak: the mountain of science involved in the issue of global warming. Ropes and crampons will be used, along with other technical aids, to ensure that those who are really determined can make it to the top. However, those who are not interested in such mountaineering are welcome to sit out this part of the expedition.

6.1 THE RISE AND FALL OF SCIENTIFIC PROOF

Before modern science, anyone who wanted to know what kept the Sun and Moon up in the sky, or how human beings came into existence, or whether people from other cultures were trustworthy, would ask a priest. This was not just a peculiarity of Western Europe, where modern science would first begin to flourish, but a more general phenomenon. As we trace cultures back in time we find that religion and science are not at first distinct. The persons who speak authoritatively about matters of fact are the same ones who speak authoritatively about God or the gods. Starting roughly with Copernicus (1473–1543) and Galileo (1564–1642), science began to assert itself against the authority of religion. These scientists said the Sun, not the Earth, was the center of the universe. Copernicus warily published his theory on his deathbed to avoid repercussions from the Church, but Galileo boldly published his ideas despite the dangers, was arrested on suspicion of heresy, and was confined to house arrest. Matters might have ended there, but on Christmas Day of the year Galileo died, Newton (1642–1727) was born. He devised the physics, and with it described the mechanism, that explained how the planets go around the Sun. Thanks to Newton, science won a decisive victory. Over the next two centuries, science went from one stunning success to another in the enterprise of understanding nature.

Gradually, the scientist in lab whites replaced the priest in black robes as the authority on what keeps the Sun and Moon up in the sky, or how human beings came into existence, and whether people from other cultures are trustworthy. Newton thought that scientific knowledge was literally proven, in the fullest sense of the word, as exemplified in mathematics. In mathematics we can prove, for example, that any triangle with sides of equal length (an equilateral triangle) also has angles of equal size. This sort of proof is the strongest possible: We cannot even conceive or imagine a triangle with equal sides that does not have equal angles as well. Newton’s epoch-making system of physics was set out in his book, Philosophiae Naturalis Principia Mathematica (The Mathematical Principles of Natural Philosophy1). Newton presents his new philosophy as though it were pure geometry, as a series of proofs of theorems from basic axioms.2 Newton’s famous three laws are presented by him as axioms, or self-evident truths, not as the result of observation or measurement. That is not only different from what scientists are now taught, but opposed to it.

David Hume (1711–1776) showed by a series of ingenious arguments that no universal claims about nature, such as Newton’s laws, could ever be proven. One way to see this is to observe that even though every massive body that we see attracts other masses via gravitation, this does not prove that those we do not see also attract each other.3 Science, in other words, involves its own species of faith: faith in the uniformity of nature. Science demands that we believe that the things which we do not see are precisely like those that we do see. But faith is not proof. For a century or so Hume’s arguments were ignored by scientists as they extended Newton’s scientific foundations to include chemistry, electricity, electromagnetism, and biology. Ernst Mach4 (1838–1916) was, however, a notable exception. Mach took Hume’s problem very seriously, so seriously that he analyzed physics methodically to identify its articles of faith. Two of the articles of faith he discovered were absolute space and absolute time. Among those Mach influenced most deeply was Albert Einstein (1879–1955), who went on to develop a new physics that rejected the Newtonian metaphysical concepts of absolute space and time in favor of relativistic space and time. As we all know, Einstein’s theory of relativity was an absolute success, with countless confirmations by observation. Perhaps the most stunning confirmation is the nuclear explosion, which confirms the equivalence of mass and energy denoted by his famous equation E = mc².

What we do not usually realize—indeed what we do not like to admit—is that Einstein’s physics disproved Newton’s. It also proved, therefore, that science is fallible. Science not only makes small superficial mistakes, but also big foundational mistakes about the fundamental nature of space, time, matter, and energy. Ironically, just as scientists were abandoning the idea of scientific proof in the last century, society at large was embracing it, as people increasingly turned toward science and away from religion and tradition for knowledge and understanding. Thus, we now find ourselves in an age when the concept of scientific proof is still accepted by people in general, even though scientists themselves gave up on this idea early in the twentieth century—at least officially.

6.2 THE RISE OF MODELING

The assimilation of this historical lesson is very clearly manifested in the view, which is nearly universal among scientists today, that the business of science is to model natural phenomena. A model is an abstract representational structure, typically constructed in mathematical terms, that is sufficiently accurate to enable us to predict and possibly control the phenomenon it represents. We are all familiar with model airplanes, model automobiles, and model ships. Like these models, scientific models include representations of the specific properties of the things modeled, but unlike them they are not physical objects themselves, but abstract structures. For example, a mathematical formula may model an orbit, a pendulum, or soil erosion. Just as a model airplane will not have all of the properties of a real airplane (its tires may not be made of rubber, its motor may not work, it may not fly), so, too, a scientific model will—in fact, must—omit some of the properties of the system. The volume of an orbiting object may be omitted so it can be represented as a point mass, friction may be omitted in the pendulum, no location may be given in a model of eroding soil, and so on. Simplification is of the essence in modeling: The right things must be left out so that the essential things can be included.

The general use by scientists of the concept of modeling to describe their work is an extremely important indicator of a big shift in thinking in the scientific community since the time of Newton: The product of science is not the universal law, but models of aspects of parts of reality (such as the crystalline aspect of salt). Fortunately, maps are a familiar type of model and can be used to illustrate two important points. First, like maps, models are neither proven nor disproven. Instead, they are useful or useless, detailed or simple, accurate or inaccurate. Second, like maps, models require simplification in order to be constructed in the first place. Just as no one expects to find actual houses on a street map, or actual water in a map of the Pacific Ocean, so scientists do not expect their models to capture every aspect of the things they model. The purpose of a model is the same as the purpose of a map: to help us find our way around. What information is contained in a map—and what is left out—depends on what bit of the world we want to navigate. The water department’s map of the same streets as those shown in the driver’s road map will show where the water mains are located, whereas the politician’s map will show the electoral districts instead. In the same way, different sorts of scientists provide different sorts of models, employing different sorts of abstraction, to serve their different sorts of interests. The geologist’s models will ignore fauna and flora to show the rock formations; the biologist’s model will ignore the rock formations to show the fauna and flora.

Scientists’ self-conscious recognition of what they are doing is reinforced by the use of computer models. No one expects that a computer model of photosynthesis will yield any actual carbohydrates or that a meteorological model of a thunderstorm will get anybody wet.5 Notable in the context of environmental science is the fact that much of the belief that we are in the midst of an environmental crisis depends on computer models. The case for the sixth extinction relies on such models. The belief that the Earth is warming dangerously because of carbon dioxide produced by human beings relies on massively complex computer models known as general circulation models (GCMs). GCMs involve a simplification that is striking: In order to model climate, weather is ignored! Because the amount of physical detail involved in global weather is far too great to be handled even by present-day computers, GCM modelers are forced to ignore it in order to model climate by attending primarily to radiation balance (the balance of incoming solar radiation with outgoing infrared radiation). Although no condensation and fall of rain is modeled, the average amount of rainfall may be modeled; although no hurricane is modeled, the average number of hurricanes may be modeled; and so on. It is just plain obvious, then, that GCMs are abstractions, well and truly divorced from full reality. This important issue is discussed more fully in Case Study 7.
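To see how far this kind of simplification can go, consider a model vastly simpler than any GCM: a zero-dimensional energy balance model, which treats the whole planet as a single point and sets absorbed sunlight equal to emitted infrared radiation, (1 − α)S/4 = σT⁴, where S is the incoming solar radiation, α the fraction reflected (albedo), σ the Stefan-Boltzmann constant, and T the resulting temperature. It contains no weather at all. The sketch below is a standard textbook illustration offered under stated assumptions (round values of about 1361 W/m² for S and 0.3 for α); it is not a description of how any actual GCM works, and the function name is hypothetical.

```python
# A zero-dimensional energy balance model: the whole planet is one point.
# No weather, no atmosphere, no geography; only radiation balance.
# Parameter values are assumed textbook round numbers; illustrative only.

SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W m^-2 K^-4
SOLAR_CONSTANT = 1361.0  # incoming solar radiation at Earth's distance, W m^-2 (assumed)
ALBEDO = 0.3             # fraction of sunlight reflected back to space (assumed)


def equilibrium_temperature(solar_constant: float, albedo: float) -> float:
    """Temperature (K) at which emitted infrared balances absorbed sunlight.

    Absorbed: (1 - albedo) * S / 4, since a sphere's surface area is four
              times the area of the disk it presents to the Sun.
    Emitted:  SIGMA * T**4
    """
    absorbed = (1.0 - albedo) * solar_constant / 4.0
    return (absorbed / SIGMA) ** 0.25


if __name__ == "__main__":
    t = equilibrium_temperature(SOLAR_CONSTANT, ALBEDO)
    print(f"{t:.1f} K")
```

With these values the model gives roughly 255 K (about −18 °C), well below the observed global mean surface temperature of roughly 288 K, chiefly because it leaves out the atmosphere's absorption of infrared radiation. Such a model is useful precisely because of what it omits, but its omissions also limit its accuracy.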

6.3 MODELS AND TRUTH

If there is no such thing as scientific proof, must we abandon the entire idea of scientific truth as well? Does science tell us the truth? Does it even make sense to think of science as seeking the truth?

The question is complex, and the answer is not simple. Certainly, we do not have to abandon the entire idea of truth. The concepts of truth and falsehood still apply to such simple claims as “DNA is a molecule,” “dinosaurs are extinct,” and “the water is boiling.” However, the concepts of truth and falsehood are far too simple to apply to entire scientific models. Like maps, models say a lot of things, and it is very crude to think of them as simply true or false. The simple claim that Main Street intersects Central Avenue may, for instance, be true. If the map shows Main intersecting Central, we may say that it contains this truth. But when it comes to the map as a whole, truth and falsehood are very blunt instruments of evaluation. We never say that a map is true or that it is false. Instead, we evaluate maps in terms of their accuracy, precision, completeness, clarity, and so on. For the same reasons, scientists judge their models in just such terms.

Still, a scientist will sometimes speak about theories being true—particularly in the context of public debate—and since theories really are just models, the scientist is in effect saying that a model is true. In a debate with a creationist, a biologist will say that the theory of evolution is true, not that it is a model which is accurate or complete or anything like that. For dramatic effect, he or she may even say that the theory has been proven, so it really is not a theory any more, but a fact. This sort of claim by a scientist is most generously interpreted as rhetorical overstatement. Taken literally it is deeply unscientific. Presumably it is not meant to be taken literally but as a way of emphasizing that the evolutionary model is so accurate, so precise, so complete, that we should just accept it and move on.

Ironically, this nonliteral, nonscientific use of the concept of truth is very close to the popular conception—or misconception. We nonscientists want a simple thumbs-up or thumbs-down when it comes to what we should think or believe. If, for example, after hearing all of the arguments pro and con we decide to accept the evolutionary model, we will say that it is true. If we decide to reject it, we will call it false. It is a busy world, and we do not always have time for subtleties. When we need to know, we need to know now: true or false? We should not be surprised, then, when scientists give us a yes-or-no answer when we ask them whether a given theory is true. Still, we owe it to ourselves to remember that true-or-false is a simplification—a big simplification. Sometimes we would do ourselves a favor by taking the time to really know the model in question. In science, as in personal relations, it is wise to devote time and sensitivity if we want to achieve something lasting and truly valuable.

In practice, therefore, truth is achieved by constructing a good model, and the pursuit of truth is the pursuit of good models. Six criteria generally apply to models and provide a basis for their evaluation:

- Precision. What, exactly, does the model say? The model cannot be checked for accuracy until what it says is plain. For example, a model which predicts that a flock of ducks will migrate south in the fall is less precise than one that predicts the specific day on which it will head south. Other things being equal, we want greater precision. We want to predict not just the season, but the very day, indeed the very second, something will happen. So precision is very valuable in scientific models. In fact, precision is one of the defining characteristics of science itself.

- Accuracy. How closely does the model match observation? The distinction between precision and accuracy is not obvious and requires a little care if it is to be understood. Precision may be thought of as the target that a model sets for itself. A more precise model sets a smaller target. Accuracy concerns whether or not it hits that target when it comes to observation and measurement. A very precise model of your weight, for instance, would predict your weight to a tiny fraction of an ounce—but that would make it more difficult for the model to be accurate. The demand for precision is what makes the demand for accuracy so difficult. Precision and accuracy are thus in tension with each other—a creative tension, but tension nevertheless.

- Consistency with other successful models. Since successful models are such only because they are precise and accurate, a new model that makes new predictions immediately finds itself in danger of being ruled out by well-established observation. Note that the consistency in question is empirical consistency. New models do not have to agree with established models on a theoretical level. Einstein’s model, for example, disagreed profoundly with Newtonian models on a theoretical level. What is essential is that new models agree with well-established observation and measurement.6 Other things being equal, we would prefer a model to be at least as precise and accurate as other models where they deal with the same empirical content.

- Scope. The broader the scope of a model, the better the model, other things being equal. If one map covers more ground than another, it is better in that regard. The broader the scope, the more things it applies to, and the more information it provides us about the world. So we prefer theories with broader scope to those with narrower scope.

- Simplicity. The point of scientific theories is to reduce the booming, buzzing complexity of the world, and to find the simpler patterns underlying the eternally new and rich variety of the unfolding universe, so that we can anticipate events and gain some control over our own destiny. If a theory were just as complex as the world itself, it would be of no use to us. Theories must help us understand the world, and this requires that they be simpler in themselves than the world is in itself. Simplicity is often in tension with accuracy or with scope. For example, classical mechanics is simpler than quantum mechanics, but it also has less scope, since it does not include subatomic phenomena, and it is less accurate, since it implies that atoms will collapse. We would most like a theory that is both simple in itself and broad in scope, but often we must surrender one of these theoretical virtues in exchange for the other.

- Outcompeting the alternatives. Since there is no absolute measure of scientific merit, we must rely on comparative measures. Therefore, the levels of precision, accuracy, consistency with other models, and scope that a model must obtain in order to be accepted by the scientific community depend on the levels obtained by other models in the same domain.
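The tension between precision and accuracy described under "Accuracy" above can be made concrete with a small sketch. It assumes, purely for illustration, that a model's prediction can be represented as a central value plus a tolerance: the tolerance is the size of the target the model sets for itself (its precision), and checking whether an observation falls inside that target is the test of accuracy. The `Prediction` class, the two models, and the weights are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    """A hypothetical model prediction: a central value and a tolerance.

    A smaller tolerance is a smaller target, i.e. a more precise prediction.
    """
    value: float
    tolerance: float

    def hits(self, observed: float) -> bool:
        """Accuracy check: does the observation fall within the target?"""
        return abs(observed - self.value) <= self.tolerance


# Two hypothetical models of the same person's weight, in pounds.
# Both aim at the same center; they differ only in precision.
vague_model = Prediction(value=150.0, tolerance=5.0)      # "150, give or take 5"
precise_model = Prediction(value=150.0, tolerance=0.01)   # "150, to a hundredth"

observed_weight = 151.2  # hypothetical measurement

for name, model in [("vague", vague_model), ("precise", precise_model)]:
    print(name, "hits" if model.hits(observed_weight) else "misses")
```

Only the more precise model misses, even though both aim at the same value: demanding a smaller target is exactly what makes accuracy harder to achieve.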

What these criteria presuppose is that scientific evaluation and judgment are relative. There are no answers to be found in the back of some great textbook in the sky, no angelic referee to tell us what is really true. We must instead do the best with what we have come up with on our own. So scientific models are not measured against the world itself, despite the countless idealizations of science which give that impression. Instead, models are checked against every relevant thing that we know and observe, however imperfectly, and that includes other scientific models. How much accuracy, precision, scope, or simplicity we demand is a function of how much accuracy, precision, scope, or simplicity is already achieved by other models in the same field. This relativity of scientific judgment must be kept in mind whenever we need to figure out just what to make of some new scientific claim. We must also remember that these theoretical virtues are in tension with each other, so that the choice of a theory is not a simple maximization problem, but a multiple-constraint problem.
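The claim that theory choice is a matter of trade-offs rather than simple maximization can be illustrated with one common way of formalizing trade-offs, Pareto dominance. This is offered as an illustration, not as a procedure scientists actually follow: one model outcompetes another outright only if it is at least as good on every criterion and strictly better on at least one. The models "A", "B", and "C" and their scores on an arbitrary 0 to 10 scale are invented for the example.

```python
# Hypothetical criterion scores on an arbitrary 0-10 scale; not real data.
CRITERIA = ("precision", "accuracy", "scope", "simplicity")

models = {
    "A": {"precision": 8, "accuracy": 7, "scope": 5, "simplicity": 9},
    "B": {"precision": 9, "accuracy": 9, "scope": 8, "simplicity": 4},
    "C": {"precision": 7, "accuracy": 6, "scope": 5, "simplicity": 8},
}


def dominates(x: dict, y: dict) -> bool:
    """True if x is at least as good as y on every criterion and better on one."""
    at_least_as_good = all(x[c] >= y[c] for c in CRITERIA)
    strictly_better = any(x[c] > y[c] for c in CRITERIA)
    return at_least_as_good and strictly_better


def undominated(candidates: dict) -> list:
    """Names of the models that no other candidate dominates."""
    return sorted(
        name
        for name, scores in candidates.items()
        if not any(
            dominates(other, scores)
            for other_name, other in candidates.items()
            if other_name != name
        )
    )


print(undominated(models))
```

Here C is ruled out only relative to A, which beats or matches it on every count, while A and B both survive: neither is better across the board, so choosing between them means deciding which virtue to give up.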

6.4 PROTECTING SCIENCE’S VALUE NEUTRALITY

One fact that science must face in its maturity is that there is no methodological guarantee that it will not be influenced by factors that are extraneous to its goals. Put plainly, science is not immune to prejudice, where “prejudice” is understood in its literal sense: judgment in advance of, or independently of, the relevant evidence. There is always the logical and methodological possibility that science’s claims to truth (keeping in mind that “truth” is a simplification) are influenced by (or are a partial function of) factors that have no bearing on whether or not the claim is true (precise, accurate, etc.). These factors may include such things as beliefs, values, hunches, inclinations, likes, dislikes, and so on. They share two features: (1) they are logically irrelevant, neither increasing nor decreasing the probability that the factual claim is true, and (2) they operate tacitly, unseen beneath the surface of scientific debate.

For example, imagine that a scientist who is a snake specialist (herpetologist) has a deep fear of snakes, due to a now-forgotten childhood incident in which he was horribly frightened by a snake that crawled into his bed. Because of this fear, the scientist is now inclined to accept the higher published ranges of the toxicity of a given snake’s venom rather than the lower ones, a judgment that might then influence other judgments and inferences. (1) His fear has no bearing on the actual toxicity of the venom, only on his judgment about its toxicity, and (2) he has no idea that his judgment is being influenced in this way.

Scientists and philosophers have been reluctantly forced to admit that there is no logical principle or methodological dictum that does, or would, or could, immunize science against prejudice. To repeat, there is no such thing as scientific proof. That means that there is a logical gap between scientific evidence and scientific doctrine: Evidence does not entail doctrine. As a matter of methodological necessity, then, scientists must, and do, cross that gap by nonlogical means. Scientists must make a leap of faith, and that is where prejudice gets its toehold.

Ron Giere, one of the most prominent contemporary philosophers of science, put the matter bluntly in a book he published in the last year of the twentieth century: “In sum, there is little in current philosophical theories of science that supports the widespread opinion that gender bias is impossible within the legitimate practice of science” (Giere 1999, p. 212). Although Giere speaks here about gender bias, the context makes clear that different sorts of bias may affect science. Science is human through and through. It is not magical; it is not infallible; it is not perfect. There is no logical or methodological prophylaxis against prejudice.

The reaction of philosophers to this dawning realization has ranged from cynicism to idealism, and from activism to complacency.7 Nonacademic, nonscientific men and women are also aware that science changes its mind, and so feel freer to pick and choose scientific theories on the basis of their own personal views and convictions. For instance, many environmentalists think it is perfectly legitimate to accept the theory that our use of fossil fuels is causing global warming not because of its scientific merit, but because they had long before come to see the automobile as ugly, polluting, a blight on cities, and a corrupter of nature. Of course, people do have every right to make up their minds as they please, but the idea that truth is a matter of free choice is not only false but dangerous.

It is crucial at this historical juncture to rediscover the ideals that originally motivated the rise of science and inspired the Enlightenment, for they still apply. The absence of scientific proof or any logic or method to make science immune to prejudice is not a reason to abandon the battle against prejudice and the struggle for truth. To the contrary, it is reason to rejoin the battle with renewed energy and fight for pure science: seeking the truth and nothing but the truth, respecting the evidence, and guarding value neutrality. Science is arguably the most important intellectual achievement of the human species. Value neutrality is essential to science, and at this point in history, when humankind is preparing to shoulder its responsibilities for nature, science is needed. So value neutrality must be safeguarded and promoted. There is no special test for value neutrality that works with 100% reliability, but that does not mean that value neutrality should be abandoned as a hopeless cause. We must not throw out the baby with the bathwater. We, along with the scientific community, must demand value neutrality of scientists, set a high value on it, and take steps to safeguard it.

What steps? We can begin by recognizing that science is in the business of creating models, and that these models are to be judged in terms of the criteria outlined above. Given the relativity of scientific judgment, we must encourage competition among scientific models. Just as evolutionary competition improves the fitness of organisms, so scientific competition improves the fitness of models. And just as evolutionary competition requires biodiversity, so scientific competition requires diversity among its models. So we must encourage scientific pluralism: the creation and development of a variety of competing models in every domain.

One unfortunate effect of science having assumed the social role of defining knowledge is that it has taken on the same authoritarianism that it battled against in its early days. This is quite understandable. Since science says what is to be taught in the schools and universities, what medicines we are to use, and what evidence is permissible in a court of law, it has been pressed to speak with a single voice. It has been pressured to create and maintain scientific orthodoxy. Understandable as this is, it has had some unhealthy results.

Because there is no formal process within science to decide which models to use and which to reject, it has instead come to rely upon informal “consensus,” as it is often called, to determine what is to count as scientific truth. This consensus is the product of historical accident, and wide open to prejudice. Whenever the concept of scientific consensus arises, we must remind ourselves that science has no formal procedure for establishing this consensus. No votes are ever taken, and scientific opinion is never measured. We must remind ourselves that science is not democratic. Its decision procedure—insofar as it has one—is not one person, one vote, but authoritarian: those in charge call the shots. Who is in charge? Scientific authorities emerge from the struggle to publish results, to get research funding, to get on editorial boards that control what gets published and on the committees that control research funding, to write the textbooks, to control the awarding of degrees, and so on—completely without procedural or substantive protocols. It is scientific charisma, as much as anything else, that determines scientific doctrine.

This process has worked surprisingly well. It is far from ideal, but the very idea of changing it would take us into territories and mire us in battles that this brief book cannot afford. We can, however, take some steps to make the best of the current state of play within science.

- Adopt a more mature view of science, one that accepts its fallibility as well as recognizes its achievements. Stop expecting science to determine the truth once and for all by proclamation. Expect scientific debate and more nuanced results.

- Safeguard and protect scientific value neutrality. Truth must be the first and last goal. Be wary of scientific programs and models that have commitments to values other than truth.

- Encourage competition among scientific models. Strong competition is needed if strong models are to evolve.

6.5 THE VALUE NEUTRALITY OF ENVIRONMENTAL SCIENCE

As we have seen, actual environmental science is applied science, not pure science. Its practitioners have the goal of bringing the planet to environmental health, which clearly cannot be done without some image of this target. Not being pure scientists, hence not being bound by value neutrality, they have indeed developed various images of environmental flourishing which converge on the concept of the pristine environment, the environment unaffected by human presence, the wilderness. Pure environmental science, by contrast, is value neutral and does not propound values or let its judgment be influenced by them.

We know as a simple matter of logic that every goal presupposes a value, and that no value is a matter of fact. Science deals with facts, makes no value claims (exercises topical value neutrality), and guards the value neutrality of its judgments (exercises methodological value neutrality). It follows, therefore, that pure environmental science cannot define environmental health, since health is evaluative. Health is an ideal, a goal that we set up for ourselves or others. This is not to say that environmental science has nothing at all to say about environmental health, because it will be the source and repository of the knowledge that is relevant both to our concept of environmental health and to the methods we choose to obtain it. Science is tasked with determining the facts, and we look to it to tell us the facts. However, its authority does not extend past the facts. When it comes to deciding what we should aim at, we have gone past the facts and into the realm of values.

William Ruddiman (2003) proposed that human beings began to cause global warming some 8000 years ago, and have thereby forestalled the beginning of the coming ice age. Evidence indicates what he calls an “anomalous” rise in atmospheric carbon dioxide levels, which he argues was caused by human beings clearing forests to grow crops and raise livestock. Then methane levels began to rise 3000 years ago, which he attributes to humans flooding fields to grow rice. Carbon dioxide and methane are both greenhouse gases, and so would cause warming, which Ruddiman suggests was sufficient to forestall the next ice age. According to the generally accepted Milankovitch orbital forcing theory, the cooling that began about 5000 years ago should have continued, and we should now be on our way into the next ice age. But, argues Ruddiman, our production of greenhouse gases has delayed this natural cooling.

A Thought Experiment. It should go without saying that the fate of a scientific hypothesis often does not run smooth,8 but let us, for the sake of argument, just suppose that Ruddiman’s theory is right. Let us imagine that we did cause global warming and thereby have delayed the onset of the next ice age, just as he proposes. Suppose further that environmental science (ES) reveals that we can prevent the coming ice age by keeping our release of greenhouse gases at about the same level it is today, with slight increases to counterbalance the increases in Milankovitch cooling over the coming millennia. If the ice age is prevented, Earth keeps the same sort of climate and the same sort of ecosystem that it has enjoyed over the last 6000 years. If the ice age is permitted, we will have the same sort of climate as during the last ice age and the same ecosystem shrinkage and drying as in the last one (see Figure 4.1). Under these circumstances, can ES tell us whether we should prevent the coming ice age?

Yes: Only ES can provide us with the relevant data. What happens to the environment in an ice age? We need to know or we cannot decide what to do, and once ES tells us, we can decide. So obviously, environmental science calls the shots here.

No: But according to our supposition, environmental science has already told us the relevant facts. We know what happens to the environment in an ice age: the same thing that happened last time. Temperate species are pushed south, compressed into a narrower band around the globe, the tropics cool, things become much drier, and so on.

Yes: It is not a simple matter of the facts. We also need to know whether the environment is healthier in an ice age or healthier without one.

No: Suppose that environmental science says it is healthier for the environment if we have the ice age. Even if we grant this, it does not follow that we should let the glaciers march in. That would only follow if we also grant that we ought to optimize environmental health. Without the assumption that environmental health is paramount, we are free to act on other values, such as keeping the current temperate ecosystems thriving or keeping the human species healthy and happy. More to the point, we do not have to grant that the environment is healthier with the ice age. Health is nothing other than the state that living systems should be in. It is therefore a matter of value, not fact.

Yes: We need environmental science to tell us whether meddling with ice ages will cause a worse disaster down the road.

No: We have already supposed that science has provided the relevant facts and that no disaster happens later. The point of this thought experiment is to fix the facts so that we can address questions of value without confusing them with matters of fact. We agree that science is responsible for informing us about the facts, both what will happen and what would happen under different circumstances, and that we rely on science for that information. However, the choice about what should happen is not a function of fact alone, but also of value. When it comes to value, a scientist has no more authority than anyone else.

The thesis that pure environmental science cannot define environmental health is one that practicing environmental scientists will find difficult to swallow, although its simple logic is inescapable. They will feel that they know the environment better than anyone, so they are best placed to advise us about its health. Advise, yes; define what is best, no. Certainly, we want to hear the advice of environmental scientists on this issue. We want to know about the unforeseen consequences of our actions, which we might later regret—as well as those we might later enjoy. These are matters of fact, after all, of the form “If you do X, then expect Y,” and about them we accept pure science’s authority. However, the question of whether we ought to avoid Y or aim for Y extends beyond that authority. We are willing to hear scientists’ opinions about what we should or should not do; in fact, we welcome them. But in this they speak not with the voice of scientific authority but as fellow human beings.

If environmental scientists find it hard to accept that their science cannot define environmental health, one reason might be that biologists are in the habit of studying the diseases of animals, which implies that disease and health are defined scientifically. This is all part of biologists’ practice of understanding life in terms of function. This is ironic, since the very concept of function involves final causation, and officially, biologists have banished final causality from their discipline. Indeed, all of modern science has done so. A final cause is one toward which things aim. For example, we are citing a final cause when we say that a cat hunts mice because it needs nourishment. Biology does not accept such explanations. Getting nourishment cannot be the cause of the hunting, since it occurs after it. The future cannot affect the past. Every biologist agrees to these well-rehearsed points.

Nevertheless, at the level of everyday biological investigation and discovery, the first thing that a biologist does when he or she comes across something new and intriguing is to ask: What is its function? And the warm glow of understanding is achieved only when that function is discovered. Still, true scientific understanding is achieved only when the causal process underlying that function is revealed. The idea that the function, the intended purpose as it were, of DNA is to carry genetic information is not science. The science of DNA consists in the discovery of the causal processes whereby it is copied and passed from parents to their offspring and directs the synthesis of the offspring’s proteins. Science is all about mechanism. It is not about purposes, goals, or intentions.

Function is purpose, purpose is goal, and goal is value, none of which belong to science. To identify a function of an organ is to identify a goal that the organ enables the organism to achieve: The eye enables the animal to see; the wing enables it to fly; the heart enables it to transport blood to its tissues.9 Goals are evaluative by their very nature: seeing, flying, or the circulation of the blood is good for the animal in question. To think of these functions as bits of the natural world alongside organisms, DNA, and so on, is unscientific. Nevertheless, one temptation for the environmental scientist will be to assume a function (for a specific ecosystem, say), then to fallaciously infer from this a proper function (for the ecosystem), and finally, to define health (of the ecosystem) in terms of the supposed proper function. For example, the function of the eye is to provide sight, and relative to that function the health or disease of the eye can be established. So an infection of the eye will make the eye unhealthy. It is tempting, then, for the biologist who has come this far to take the next step, which is to conclude that the eye infection is a bad thing, and so to draw a value conclusion solely from scientific facts.

That this is fallacious can be seen in the fact that the eye infection is a bad thing only relative to a presupposed function of the eye and the interests of the organism whose eye it is. Suppose, for instance, that the infected eye we are considering belonged to one of the 24 rabbits released by Thomas Austin in Australia in 1859, the very rabbits that went on to thrive and cause various effects on the local plants and animals that environmentalists universally reckon to have been devastating. Suppose that the rabbits had been infected with a microorganism that would have made them blind and unable to survive in the Australian countryside. Because the infection would have impaired the function of the rabbits’ eyes, it would have been bad for their eyes and bad for them. But from the point of view of the local plants and animals that would have been saved from competition with the rabbits, the infection would have functioned as their salvation, and so would have been good—an opinion they would have shared with the microorganisms infecting the rabbits’ eyes themselves (my enemy’s enemy is my friend). Since values depend on point of view, the proper functioning of the rabbits’ eyes may be either good or bad. Functions do imply values, but only relative to a point of view.

Things only get worse when we try to infer the health of an ecosystem from its function or functions. From the point of view of the pronghorn antelope, the function of its kidneys is to cleanse its blood. But from the point of view of the plants in its terrain, the function of its kidneys is to excrete nitrogen and disperse it in the soil in a form the plants can absorb. And from the point of view of the mountain lions that prey on the pronghorn, its kidneys serve as a nutritious snack. If we try to figure out functions ecosystemically, we are forced to make an arbitrary choice of a particular point of view within the ecosystem. The ecosystem is an abstraction, and it has no point of view, even though everything that lives in it does. The ecosystem is just the present time slice of biological activity conceived (vaguely) as a system, a set of interlocking functions. Without function, there is no system. As for the land itself, it is merely the stage upon which life has acted out innumerable dramatic episodes, although it has spent most of the last few million years under hundreds of meters of ice.

6.6 THE SPECIAL CHALLENGE FACED BY PURE ENVIRONMENTAL SCIENCE

The epistemic mission of pure environmental science (PES) is to understand the biological world as a unified system. Traditionally, science has studied the world by analysis: breaking it into pieces, with designated specialists studying each piece. So far, biology has followed this plan. Biology is the study of life, but you will only find out what life is by studying all of its various aspects: cellular metabolism, reproduction, genetics, speciation, and so on. There is no generally recognized biological specialty devoted to the study of life as such. The botanist studies plants, the zoologist studies animals, the molecular biologist studies molecular processes, and so on. The goal of PES is to put all of the pieces back together again. Its identifying task is not the typical scientific task of analysis. PES is the biological specialty that aims at synthesis. If it succeeds, we will then have a specialty that does study life itself rather than simply its components. Because its job deviates from that of normal science, PES faces abnormal and extremely difficult problems. Even if environmental science succeeds in protecting its value neutrality, it still faces this special challenge not faced by the other sciences.

Science has traditionally relied on simplification and analysis in order to gain certainty, but PES aims at complexity and synthesis instead. The tradition of analysis and simplification can be illustrated by considering the case of freely falling bodies that Galileo first solved. Galileo’s genius was to recognize that the motion of actual falling bodies can be analyzed as the combination of two different mechanisms: the free fall of a body plus the resistance of the medium through which it falls. Actual falling bodies are a very mixed bag: The falling of a person to his knees is very different from the falling of a pendulum on its downward swing; the falling of a stone is very different from the falling of a feather. Galileo spotted the possibility of an underlying simplicity: The rate of fall of the person, the pendulum, the stone, and the feather would be the same if only they were not subject to different degrees and types of resistance. In a vacuum, Galileo opined, the feather would fall just as fast as the stone. Within a few decades, in 1659, Robert Hooke (1635–1703) built a vacuum pump for Robert Boyle, and this soon led to one of the most important and persuasive experiments of the Age of Reason: direct comparison of the rate of fall of feathers and stones in a vacuum. In this classic experiment, air is pumped out of a tall glass jar, then a device simultaneously drops a feather and a lead weight, and both can be seen to fall at the same speed to the bottom of the jar.

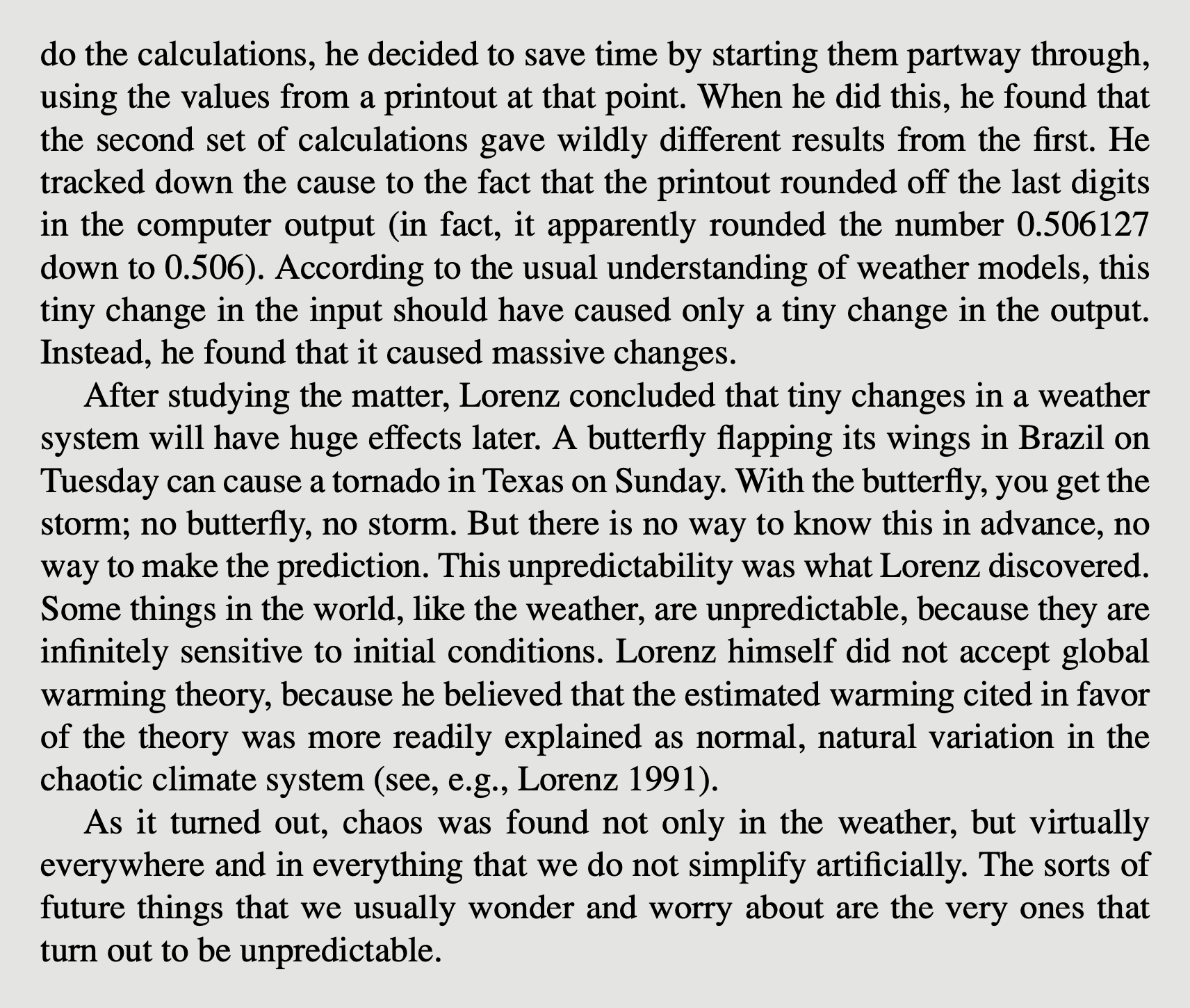
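Galileo’s decomposition of a real fall into free fall plus the resistance of the medium can be sketched numerically. The Python below is a minimal sketch, not a model from this chapter: it assumes quadratic air resistance summarized by a terminal velocity, and the terminal velocities for the “stone” (30 m/s) and the “feather” (0.5 m/s), like the 2 m drop height, are hypothetical round numbers chosen only for illustration. With resistance switched off, the body’s identity plays no role at all, which is the vacuum-jar result; with it switched on, the two bodies separate sharply.

```python
import math

G = 9.81  # gravitational acceleration near Earth's surface, m/s^2


def fall_time(height, terminal_velocity=None, dt=1e-4):
    """Seconds needed to fall `height` metres from rest.

    Mechanism 1 is free fall at constant acceleration G.
    Mechanism 2 (optional) is quadratic air resistance, summarized by the
    body's terminal velocity; terminal_velocity=None models a vacuum.
    Integrated with small fixed time steps (semi-implicit Euler).
    """
    t, y, v = 0.0, 0.0, 0.0
    while y < height:
        if terminal_velocity is None:
            a = G  # vacuum: the acceleration is the same for every body
        else:
            # Drag grows with v**2 and cancels gravity at the terminal velocity.
            a = G * (1.0 - (v / terminal_velocity) ** 2)
        v += a * dt
        y += v * dt
        t += dt
    return t


# Hypothetical terminal velocities, for illustration only.
bodies = {"stone": 30.0, "feather": 0.5}
for name, vt in bodies.items():
    print(f"{name}: vacuum {fall_time(2.0):.3f} s, air {fall_time(2.0, vt):.3f} s")
```

The model captures only the smooth, average effect of air resistance; it says nothing about the flutter and tumbling of a real feather.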

What is often forgotten is that we still cannot precisely predict the rate of fall of the feather when it is back in the air—and as far as we can tell, never will. If a feather is dropped repeatedly from the same spot several feet above the ground, it will follow different paths to the ground, require different amounts of time to get there, and stop in distinctly different positions each time it is dropped—over and over, virtually forever. The unpredictability of the feather’s trajectory is a feature of nature itself. Even if extreme care is taken to make sure that the feather is in exactly the same position each time it is released, and to make sure that the air is calm, at the same temperature, humidity, and so on, the feather will follow a different line to the ground each time. In more scientific terms, even if we begin with the same initial conditions, the state of the system evolves differently each time. But however one puts it, how can different effects issue from the same cause?

The only answer is that there are (or must be) undetectably tiny differences in the initial conditions that quickly balloon into enormous differences in the path followed by the falling feather. Some of these differences do occur at the molecular level. We know that just controlling the temperature, pressure, and stillness of the air will not eliminate the differences at the molecular level. What we call temperature is just the average energy of the molecules of air surrounding the feather. Temperature is a mathematical construct, a pure abstraction, a gross measure that we use because we know how to measure it. If we are to have any hope of precisely calculating the trajectory of the feather when it is released, we need to know, for starters, the actual energy of each of the air molecules that will collide with the feather before and during its fall. But getting precise information about these trillions of molecular collisions is a technical impossibility and will remain so for the foreseeable future. Calculating their effects poses an even more difficult problem that we are unable to solve even in principle, given that computational power is limited in the end by the sheer size of any possible computer.

The only thing to do is swallow hard and admit that as far as we can tell, we will just have to be happy with predicting the average trajectory of the feather. We have good scientific reasons for believing that this is about as good as it will ever get. The scientific phenomenon that limits the precision and accuracy of science is well studied and well understood. It is often called “infinite sensitivity to initial conditions,” or, more popularly, chaos.10 Chaos is a misnomer, since chaos implies freedom from the rule of natural law, whereas the systems in question are thought to be fully determined by law, but nevertheless, unpredictable. Unfortunately, the biological systems that PES studies are like the feather released in the air, not like the feather falling in a vacuum. They are complex systems, whereas the highly accurate models on which science has built its reputation always deal with simple systems. The essence of modeling is simplification. A model is necessarily less complex than the reality it represents. A map cannot contain the complexity of the real terrain, and a model of the atmosphere running in a computer the size of a box cannot contain the endless details of the massively larger and more complex atmosphere itself. Since the behavior of the atmosphere depends very sensitively on just those details, no model can predict its behavior.
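Sensitivity to initial conditions is easy to exhibit in a system far simpler than a feather. The sketch below uses the logistic map x → r·x·(1 − x) with r = 4, a standard textbook example of deterministic chaos, purely as a stand-in; it is not a model of the feather or of the atmosphere, and the starting value 0.2 is an arbitrary choice. Two starting values differing by 1e-10 are iterated under the same fully deterministic rule, and the gap between them grows until the two trajectories bear no resemblance to each other.

```python
def logistic_trajectory(x0, r=4.0, steps=100):
    """Iterate the logistic map x -> r*x*(1-x) from x0, returning every state."""
    xs = [x0]
    for _ in range(steps):
        x = xs[-1]
        xs.append(r * x * (1.0 - x))
    return xs


a = logistic_trajectory(0.2)
b = logistic_trajectory(0.2 + 1e-10)  # a starting difference far below any measurement
gaps = [abs(x - y) for x, y in zip(a, b)]

for n in range(0, 60, 10):
    print(f"step {n:2d}: a={a[n]:.6f}  b={b[n]:.6f}  gap={gaps[n]:.1e}")
```

Rerunning from exactly the same starting value reproduces the trajectory exactly, so the rule is fully lawlike; it is the unmeasurably small difference in the starting point that defeats prediction.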

Science has gained its reputation for precise prediction through its success with purposely simplified systems. The natural systems that science has been able to predict with precision and accuracy have been in the heavens, not on Earth. The solar system is a naturally occurring simplified system. Sunrise, sunset, and eclipses of the Sun or Moon can be predicted to the second months or years in advance. But when it comes to earthly phenomena, precision and accuracy have been restricted to cases where we have created simplicity in the lab or in the computer. The behavior of ordinary, complex systems that are found in the real world outside the laboratory has largely remained outside the scientific domain. The discovery of chaos and the development of chaos theory show that the struggle to bring such systems within that domain will, as far as we can now tell, never be won. Approximation and compromise are the best we can hope for.

As Nancy Cartwright (1999) puts it, we live in a dappled world: a world that, despite science, is still full of possibilities, surprising events, and wonders that we have not yet appreciated. Science rules in worlds of its own making, the worlds of the fundamental simple forces and processes where physics and chemistry prevail. On the laboratory bench and in the technological innovations it has made possible, science has achieved levels of precision that are absolutely stunning. This precision itself is an excellent reason to accept that nature is lawlike throughout, not just on the lab bench. Presumably the laws of physics and chemistry and all the physical sciences rule everywhere, but even so, that does not make the rest of the world predictable by science. In the perfect vacuum of the bell jar, the perfect mechanics of Newton prevails, but outside the bell jar, feathers and leaves and raindrops fall to Earth, tracing paths no human being can predict. In the perfect vacuum of the particle accelerator, quantum mechanics and relativity prevail, but outside it the weather takes its own unpredictable course here on Earth as on the Sun and a thousand other planets and suns. Thus, the world will continue to surprise us. To bring this discussion of chaos back down to Earth, life itself is not predictable. We will run into friends that we never thought we would meet again11 and make new friends whose identities must remain a mystery to us until then.

On September 11, 2007, the sixth anniversary of the attacks on the World Trade Center and the Pentagon, Osama bin Laden spoke out against global warming. This marks a triumph for environmentalism, for it shows that it has become truly global. Although Osama has very few beliefs in common with my neighbors and friends, he does share with them a concern for the health of the planet. Osama bin Laden, Al Gore, Pope Benedict, Bill Gates, Noam Chomsky, Madonna, the Dalai Lama, George Bush, Vladimir Putin, and the National Chief of the Canadian Assembly of First Nations, Phil Fontaine, are a diverse group of human leaders. Yet they all have one thing in common: They believe (or believe in) global warming. The global warming theory (GWT) is arguably the first truly global news story, one that reaches not only everyone’s ears but also their hearts and pocketbooks. The entire human race has been told that the centuries-long party of economic growth is over, and now the CO₂ bill has to be paid. Sure, there were stories of global interest before, especially those predicting nuclear apocalypse a generation ago, but those were only warnings of things to come. Global warming, we are told, is happening right now, and must be stopped by reducing CO₂ emissions right now.

Whatever we human beings do in response to the threat of global warming, it will be momentous for the environment, and momentous for you and me. Even if we do not live to see the days when the threat is supposed to be realized, we are charged with making up the collective mind of the human race today. We cannot address environmentalism today without addressing global warming.12

The Threat Forecast by Global Warming Theory. The Intergovernmental Panel on Climate Change (IPCC) officially states in its latest, and fourth, assessment report that nearly every one of the last several years is among the several hottest in 1000 years or more. It also states that the warming is caused by us, and that it cannot be stopped, only mitigated. Even if CO₂ emissions are totally eliminated by 2100, the warming will last for thousands of years (AR4, pp. 77–80).13 According to the press releases of the IPCC, the result will be an ecological catastrophe—the realization of the environmental apocalypse that has been forewarned, and feared, since the 1960s.14 Therefore, we must begin scaling back the use of fossil fuels as quickly as possible in order to limit the damage: reduce CO₂ emissions to 5% below 1990 levels by 2010, to 50% below 1990 levels by 2050, with 100% reduction (total elimination) of CO₂ emissions by 2100.15

The Threat of Global Depression. Why have we not met these reduction targets? Surely if it were easy to do, we would have done it. The problem is that the human economy runs on energy, and the majority of this energy comes from fire and hence produces CO₂. Meeting the first of the Kyoto targets would have caused a massive global depression. Since emissions have grown steadily since 1990, meeting the first target would now require a global cut of over 20% of current emissions, which cannot be done without massive disruption of every sector of the economy, including food production. In short, human beings do not know how to obtain the necessities of life without the burning of fossil fuels. So the first target cannot and will not be met. The IPCC nevertheless insists loudly and resolutely that we must start making cuts to CO₂ emissions. This cannot be done without putting the brakes on the global economy. We all know that it hurts when the economy slows down: We work less, we spend less, we travel less, we buy fewer clothes, fewer books, cheaper foods, give less to charity—we live less. The IPCC itself says “it is clear that the future impacts of climate change are dependent not only on the rate of climate change, but also on the future social, economic and technological state of the world” (op. cit., p. 824). This is, of course, perfectly true. It is something that we all have learned from our own personal experience.
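The “over 20%” figure follows from simple arithmetic once one knows how far emissions have grown since 1990. The sketch below assumes a single global emissions total and the first target stated in this section (5% below 1990 levels); the growth rates in the usage lines are hypothetical inputs, not data. If current emissions are (1 + g) times their 1990 level, reaching 0.95 times the 1990 level requires eliminating a fraction 1 − 0.95/(1 + g) of current emissions, so a required cut above 20% corresponds to growth of more than 18.75% over 1990.

```python
def required_cut(growth_since_1990, target_fraction_of_1990=0.95):
    """Fraction of *current* emissions that must be eliminated to hit the target.

    growth_since_1990: current emissions as a fractional increase over 1990
        (0.10 means 10% above the 1990 level).
    target_fraction_of_1990: target emissions as a fraction of 1990 (0.95 = 5% below).
    """
    current = 1.0 + growth_since_1990  # current emissions, in units of the 1990 level
    return 1.0 - target_fraction_of_1990 / current


def growth_for_cut(cut, target_fraction_of_1990=0.95):
    """Growth over 1990 at which the required cut equals `cut` (inverse of required_cut)."""
    return target_fraction_of_1990 / (1.0 - cut) - 1.0


# Hypothetical growth figures, for illustration only.
for g in (0.0, 0.10, 0.25):
    print(f"emissions {g:.0%} above 1990 -> cut {required_cut(g):.1%} of current emissions")
print(f"a cut above 20% implies growth above {growth_for_cut(0.20):.2%}")
```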

A simple, inconvenient truth is forgotten by those who call for cuts to CO₂ emissions: The economy is nothing other than the sum total of the ways in which we human beings make a living. It includes everyone: laborers, hockey players, professors, farmers, industrialists, native herders, astronauts, environmental pundits, and priests. The word economy conjures up the image of money, but money is merely the counter in the social system that we have developed to help us make our living. Food, clothing, and shelter are the main business of the economy, and when business is bad, they are just that much harder to get. Our economy is integrated with our bodily metabolism in the same way that the business of bees, making honey, is integrated with their bodily metabolism. Bees have an economy, too: the economy of the hive. In the bee economy, worker bees build hives and honeycombs, gather nectar, make honey and store it in the honeycombs, and then distribute it among themselves and their offspring. In the human economy we humans build houses and storage elevators, plant fields and gather food, and then distribute it among ourselves and our offspring. But unlike the bees, our methods of doing all of this have evolved so that they now depend on the use of fire. At the moment, we cannot survive without fire. It is conceivable that we can gradually transform our economy, but we do not know how to do that right now, today. If we cut CO₂ emissions, we will reduce overall economic activity—in other words, cause an economic depression.16

It may seem odd that the ones who are first to suffer from a downturn in the economy are those who seem farthest from it, those who have the least to do with money, the rural poor who eke out a living tending a garden and a few chickens. It is true, nevertheless. If we remember that “the economy” is just another term for humankind making its living, it will not seem so odd: When it gets harder in general to make a living, those who just barely make a living will be hurt most. The poor need to buy the things they cannot or do not make for themselves, such things as matches, soap, toilet paper, needles, thread, fabric, eyeglasses, toothbrushes, pots, pans, shovels, plows, books, shoes, medicines—the countless “little” things that make life possible and tolerable. When the economy goes bad, these things become more expensive, the money to pay for them becomes scarcer, and the poor must suffer doing without, the worst form of economic hardship.

Because global warming pits the good of the environment against the good of the economy, it threatens to hit us all where it hurts, and where it hurts the poor most. Of course, the impression created by those who profess and promote the Kyoto Accords is that the only ones to be hurt will be the rich, greedy people at the heart of the problem in the first place. This is, as anyone with any experience of things on this Earth knows, false. The rich, as always, will do best no matter what circumstances prevail. The poor themselves will testify that they suffer most during hard times.

The Question. The IPCC presents humankind with the following argument:

- Premise: GWT is true.

- Conclusion: Therefore, we must reduce CO₂ emissions.

Thus, humankind faces two questions. First, the factual question: Is GWT true? Second, the value question: Should we reduce CO₂ emissions if GWT is true? Here we address only the first question, the factual question, with the understanding that what is at stake is the rest of the IPCC argument. Thus, our question may be restated as follows: Are we sufficiently confident in the truth of GWT to slow the human economy because of it?
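That the IPCC argument needs a value premise as well as a factual one can be checked mechanically. The sketch below is a minimal truth-table test of propositional validity: an argument is valid when no assignment of truth values makes every premise true and the conclusion false. The proposition names and the added conditional premise are illustrative labels, not the IPCC’s wording. The premise “GWT is true” alone does not validly yield “we must reduce CO₂ emissions”; the argument becomes valid only when the value premise “if GWT is true, we must reduce CO₂ emissions” is added.

```python
from itertools import product


def is_valid(premises, conclusion, variables):
    """True if no assignment of truth values makes every premise true and the conclusion false."""
    for values in product([True, False], repeat=len(variables)):
        world = dict(zip(variables, values))
        if all(p(world) for p in premises) and not conclusion(world):
            return False  # found a counterexample
    return True


VARIABLES = ["gwt_true", "must_reduce"]


def gwt(w):  # factual premise: "GWT is true"
    return w["gwt_true"]


def must_reduce(w):  # conclusion: "we must reduce CO2 emissions"
    return w["must_reduce"]


def value_premise(w):  # value premise: "if GWT is true, we must reduce CO2 emissions"
    return (not w["gwt_true"]) or w["must_reduce"]


print(is_valid([gwt], must_reduce, VARIABLES))                 # False
print(is_valid([gwt, value_premise], must_reduce, VARIABLES))  # True
```

The counterexample the first check finds is the world in which GWT is true and yet we are not obliged to reduce emissions; only the value premise rules that world out.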

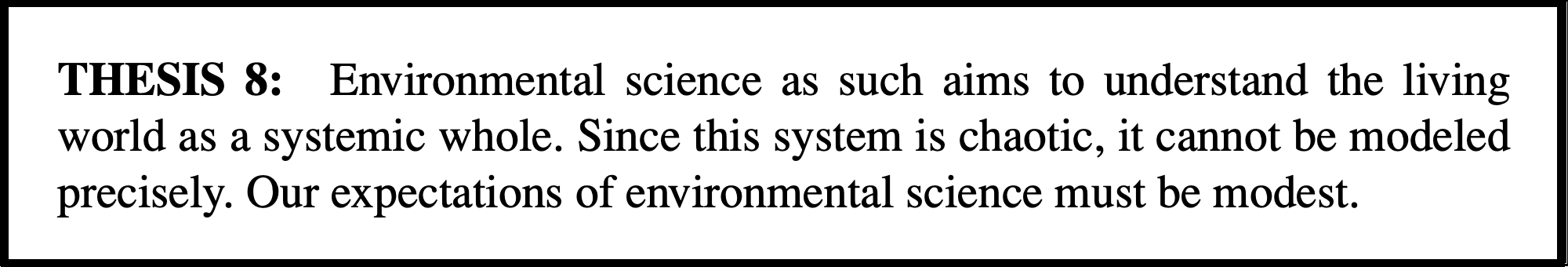

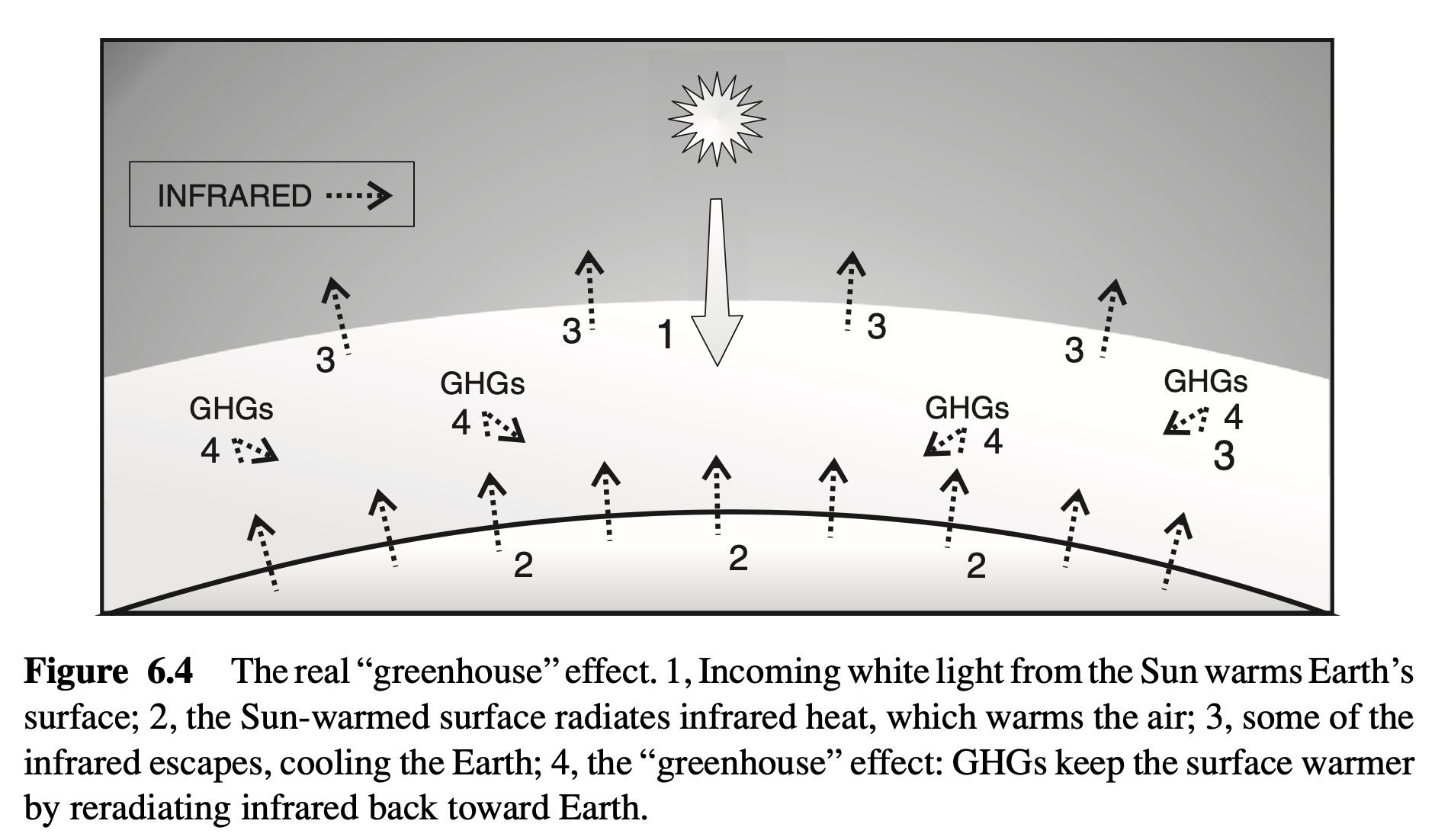

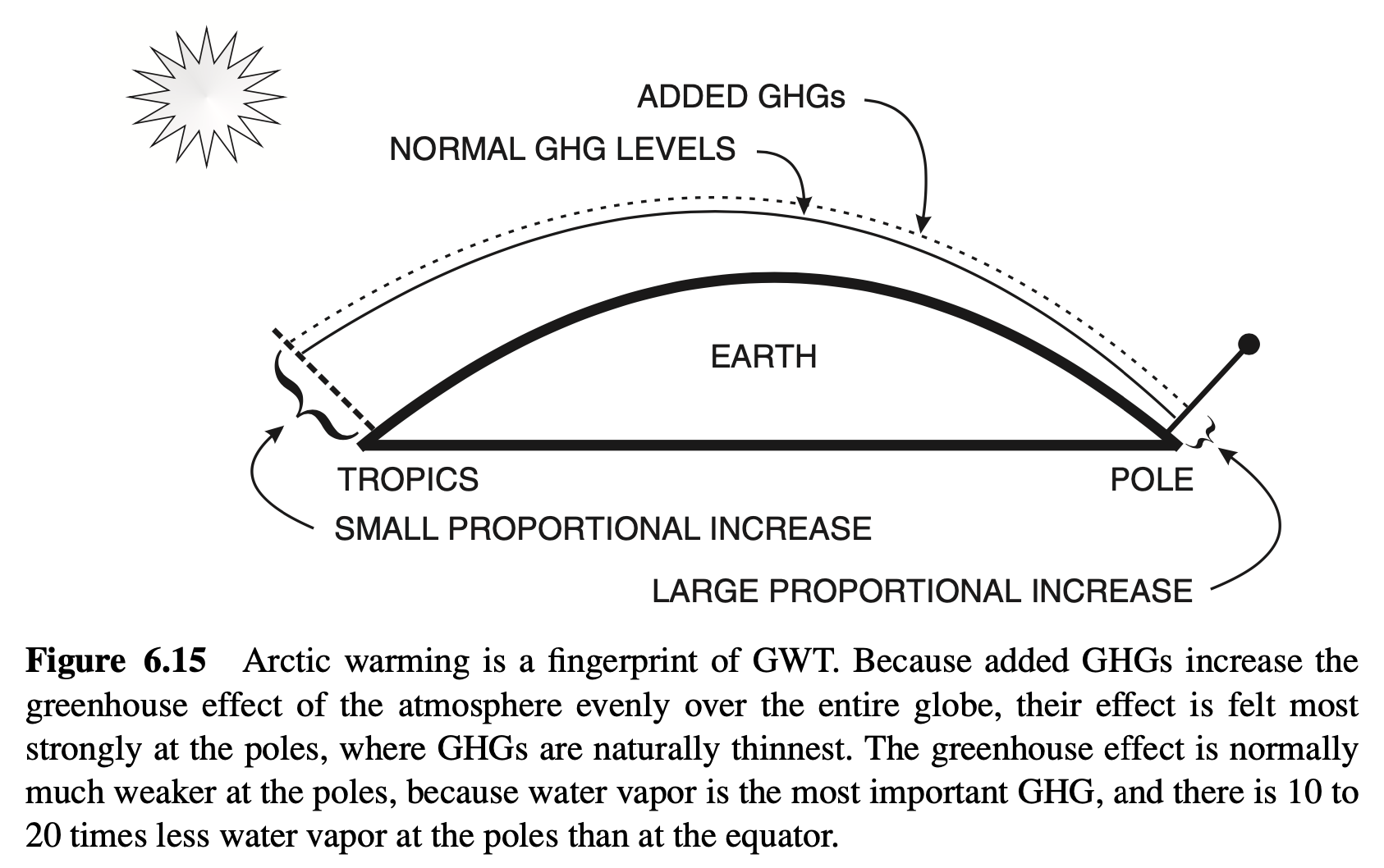

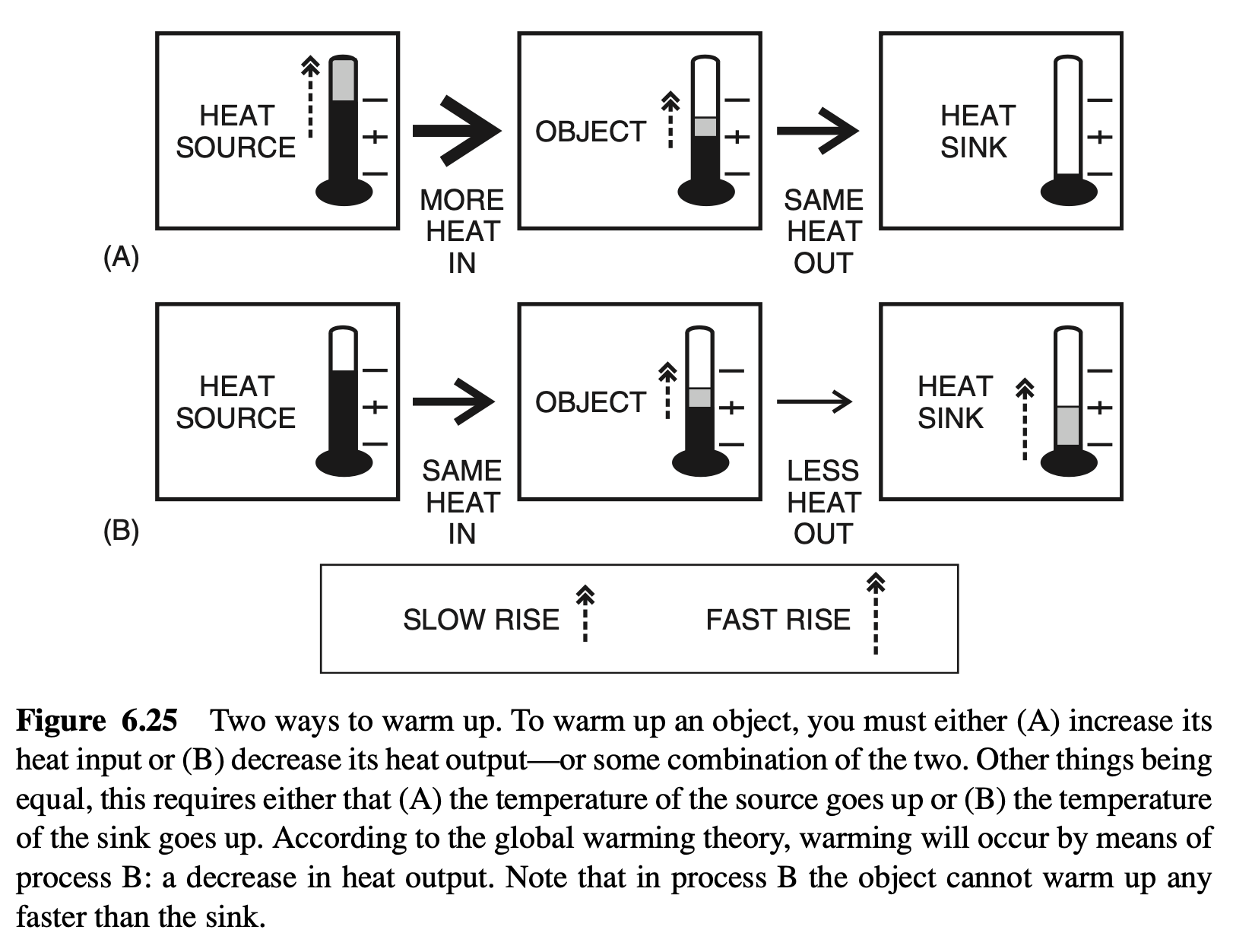

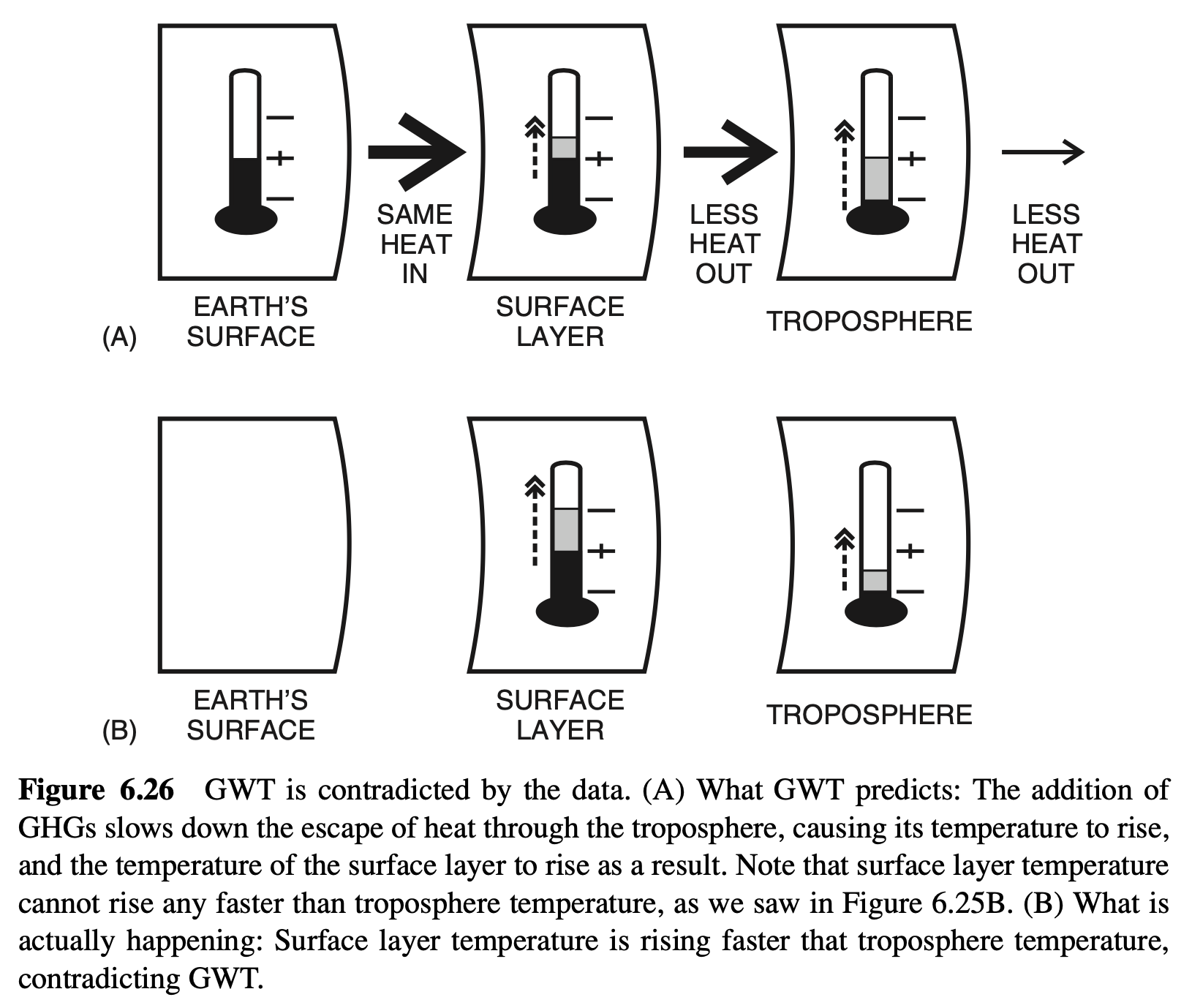

Yes 1: The Greenhouse Argument Proves Global Warming Theory. The greenhouse gases (GHGs) we produce are like the glass walls of a greenhouse: They let in the heat from the Sun while preventing its escape, as shown in Figure 6.1. There is a natural level of CO₂ that keeps the temperature where it should be. We have disturbed this natural level by using fossil fuels and thus have disturbed the temperature by making the glass in the greenhouse thicker. This “runaway greenhouse heating” means that Earth is headed for ever higher temperatures and environmental disaster.
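The radiative half of this argument can be made concrete with the one-layer energy-balance model found in introductory climate texts. The sketch below is an illustration that rests on assumptions not taken from this chapter. It uses a solar constant of 1361 W/m² and a planetary albedo of 0.30. It also assumes a single atmospheric layer that absorbs a fraction `eps` of the infrared leaving the surface. Raising `eps` plays the role of “thicker glass.” In this model heat moves only by radiation. It contains no convection.

```python
# A textbook single-layer ("leaky greenhouse") energy-balance sketch.
# Assumptions (not from the text): solar constant, albedo, and one
# isothermal atmospheric layer that absorbs a fraction eps of surface infrared.
# Heat moves only by radiation here; there is no convection.

SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W m^-2 K^-4
S0 = 1361.0              # assumed solar constant, W m^-2
ALBEDO = 0.30            # assumed planetary albedo


def emission_temperature(s0: float = S0, albedo: float = ALBEDO) -> float:
    """Temperature (K) at which an airless planet balances absorbed sunlight."""
    absorbed = s0 * (1.0 - albedo) / 4.0   # sunlight averaged over the sphere
    return (absorbed / SIGMA) ** 0.25


def surface_temperature(eps: float) -> float:
    """Surface temperature (K) under one layer absorbing a fraction eps of surface infrared.

    From the top-of-atmosphere and layer balances: Ts^4 = Te^4 * 2 / (2 - eps).
    """
    if not 0.0 <= eps <= 1.0:
        raise ValueError("eps must lie in [0, 1]")
    return emission_temperature() * (2.0 / (2.0 - eps)) ** 0.25


for eps in (0.0, 0.5, 0.8, 1.0):
    print(f"eps = {eps:.1f}: surface temperature ~ {surface_temperature(eps):.1f} K")
```

With these assumed inputs, the bare-planet temperature comes out near 255 K. A fully absorbing layer (`eps = 1`) raises the surface temperature by a factor of 2^(1/4), to roughly 303 K. The real atmosphere is neither a single layer nor purely radiative, so these numbers show only the direction of the effect.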

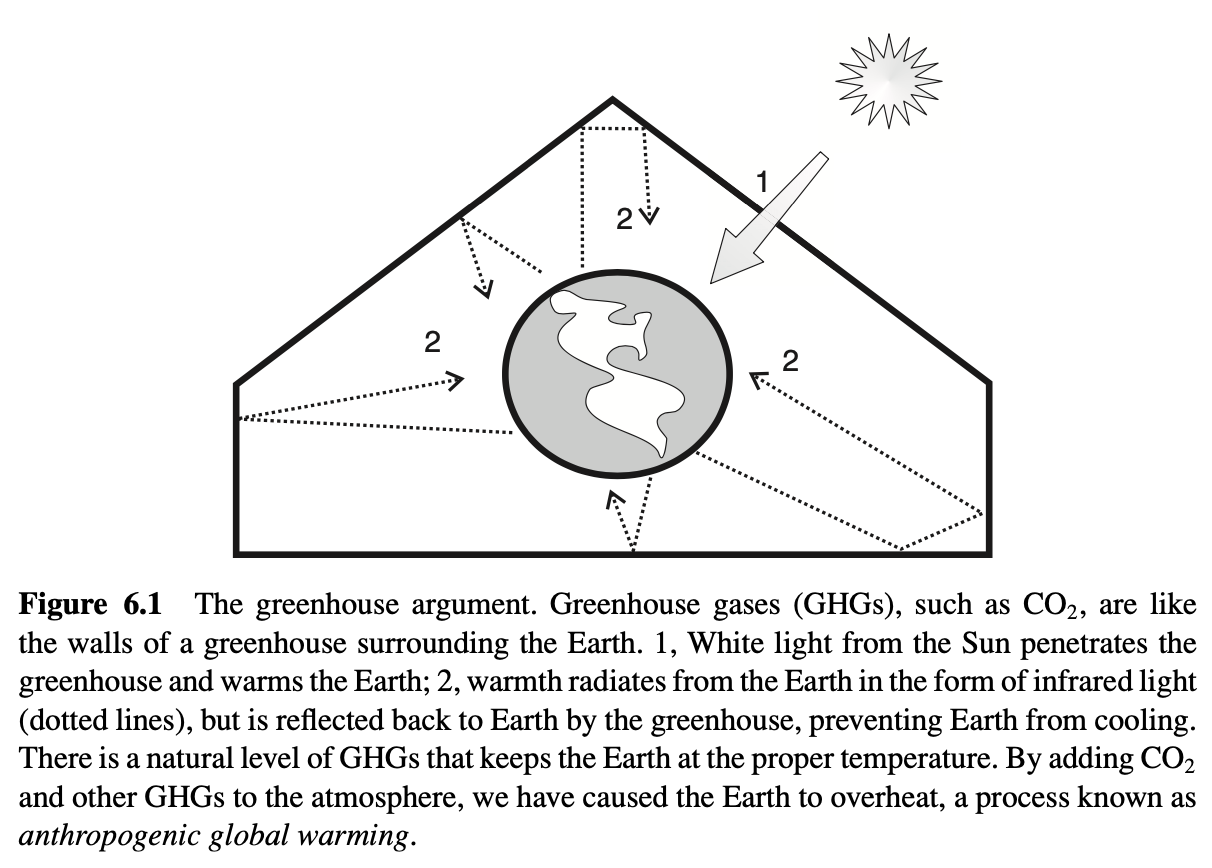

No 1: The Greenhouse “Argument” Is Merely a Misleading Metaphor. No doubt the political persuasiveness of popular GWT turns on the fact that everyone knows that greenhouses, like automobiles, get very warm in the Sun, even though in the greenhouse metaphor the actual mechanism of this warming is misrepresented. Although it is true that glass is more transparent to visible light than to infrared, this radiation effect is inconsequential in an actual greenhouse. Actual greenhouses work by interrupting air circulation, as shown in Figure 6.2A. If the greenhouse does not trap warm air inside, it is no warmer inside than out, as shown in Figure 6.2B. Greenhouse operators know this, since they cool their greenhouses by opening vents that permit warmed air to escape, although this has very little effect on the radiation balance within the greenhouse.17 So, despite the fact that radiation is still trapped as much as it ever was, the greenhouse cools. In an actual greenhouse, the warming of air by infrared radiation causes air motion, just as it does in the atmosphere outside. Convection is a natural engine that is powered by infrared light, converting heat into motion. This engine normally transports heat upward and away. A greenhouse gets warmer because it prevents this natural engine from working. Since it is false that GHGs prevent convection, the greenhouse argument simply fails to apply to the actual atmosphere.

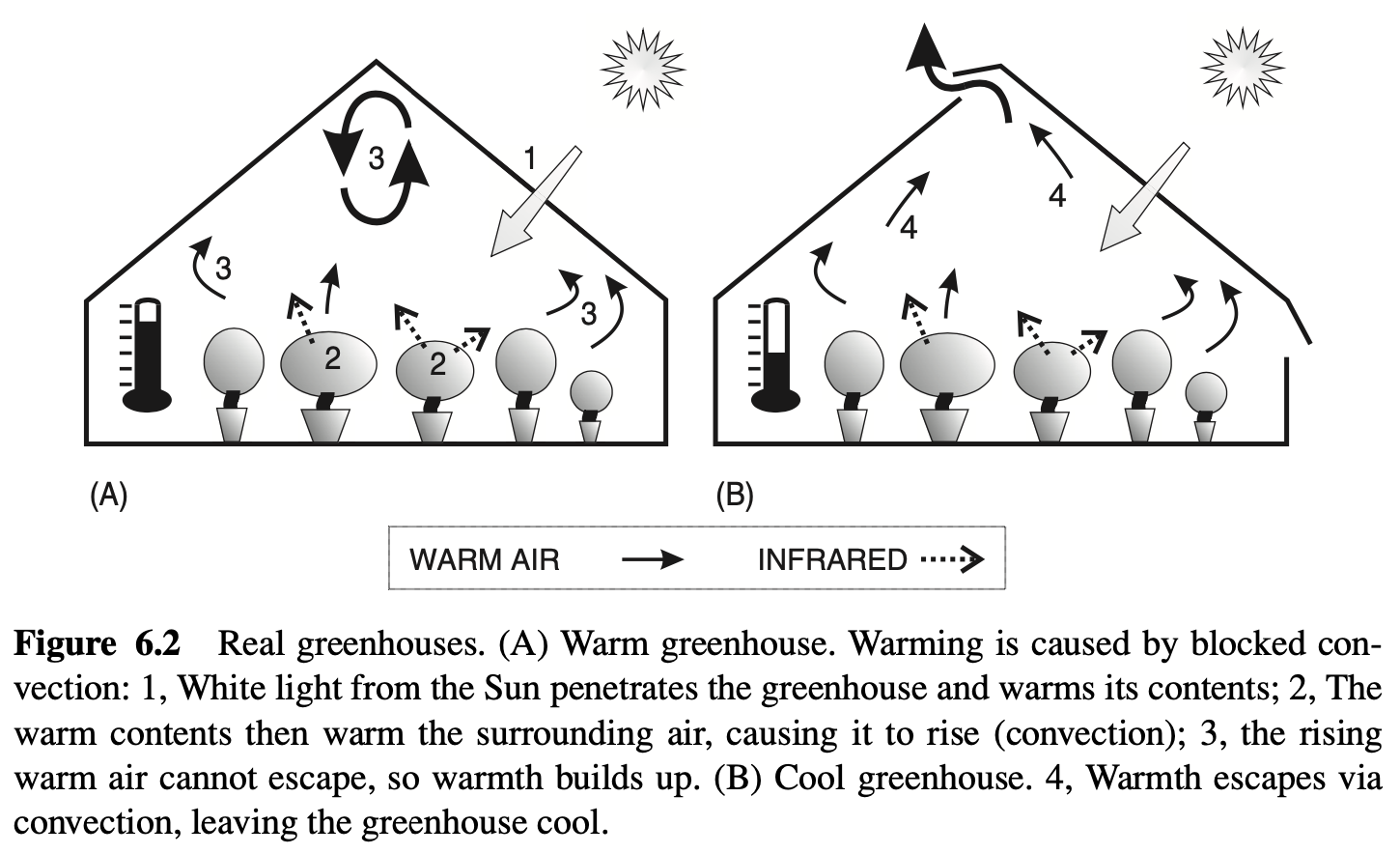

In reality, incoming white light from the Sun does not directly heat our atmosphere, which is nearly transparent to white light.18 Instead, our planetary warmth begins at the surface when the visible light of the Sun is absorbed by oceans, ground, buildings, trees, and so on, and warms them, as shown in Figure 6.3. Warm things radiate heat: They glow. We can see a red-hot nail glow, but warm rocks, trees, buildings, and even our own bodies glow too. They glow only more faintly, and in the infrared, which we cannot see with the naked eye. In this way, Earth’s surface warmth is radiated as infrared light into the overlying atmosphere. Most of this infrared radiation is absorbed, since the lower atmosphere is nearly opaque to infrared light.19
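Two textbook laws quantify the point about glowing. Wien’s displacement law gives the wavelength at which a body at a given temperature radiates most strongly. The Stefan–Boltzmann law gives how much power it radiates per square metre. The sketch below uses assumed round temperatures: about 5772 K for the Sun’s surface, 1000 K for a red-hot nail and 288 K for Earth’s surface. It also treats each as a perfect blackbody, which is an idealization.

```python
# Wien's displacement law and the Stefan-Boltzmann law, used to show why
# sunlight peaks in the visible while Earth's surface glows in the infrared.
# The temperatures are assumed round figures, not values from the text,
# and every body is idealized as a perfect blackbody.

WIEN_B = 2.897771955e-3   # Wien's displacement constant, m K
SIGMA = 5.670374419e-8    # Stefan-Boltzmann constant, W m^-2 K^-4


def peak_wavelength_um(temp_k: float) -> float:
    """Wavelength (micrometres) at which a blackbody at temp_k emits most strongly."""
    return WIEN_B / temp_k * 1e6


def blackbody_flux(temp_k: float) -> float:
    """Power emitted per square metre (W m^-2) by a blackbody at temp_k."""
    return SIGMA * temp_k ** 4


bodies = [("Sun's surface", 5772.0), ("red-hot nail", 1000.0), ("Earth's surface", 288.0)]
for label, temp in bodies:
    print(f"{label:16s} {temp:6.0f} K  peak {peak_wavelength_um(temp):6.2f} um  "
          f"flux {blackbody_flux(temp):12.1f} W/m^2")
```

The Sun’s emission peaks near 0.5 micrometres, in the visible. Earth’s surface peaks near 10 micrometres, in the infrared, and radiates roughly 390 W/m². Even the red-hot nail emits most of its energy in the infrared, with a peak near 2.9 micrometres. What we see is only the short-wavelength tail of its glow.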

The surface layer of atmosphere would become too hot were it not for convection, the main engine of global cooling and precisely what is left out of the greenhouse argument. Warm air expands, gets lighter (weighs less per unit of volume), and so rises (or is buoyed upward by the heavier air around it). When warm air rises because of convection, the air around it moves in to replace it, causing wind. The moving atmosphere interacts with the land, the waters, the vegetation, the mountains, the glaciers, and so on, both picking up and losing such things as dust, gases, heat, and water vapor. The rising air stirs this moisture and heat, creating rain, storms, waves, hurricanes, thunder, and lightning—all of the ongoing drama that we call weather. In the process, the heat that began at the surface makes its way to the upper atmosphere, where it can escape as infrared radiation into outer space. So the real atmosphere is a bit like the cool greenhouse from Figure 6.2B, but nothing at all like a closed greenhouse. Thus, the greenhouse argument simply does not apply.
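The buoyancy step can be checked with the ideal gas law. At a given pressure, density is inversely proportional to temperature. A warmer parcel is therefore lighter than its surroundings and is pushed upward. The sketch below assumes dry air at sea-level pressure and two illustrative temperatures. It gives only the initial acceleration of the parcel. It ignores drag, mixing, and the cooling the parcel undergoes as it rises and expands.

```python
# Ideal-gas sketch of why warm air rises. Assumed values: dry air at
# sea-level pressure and two illustrative temperatures (not from the text).
# Only the initial buoyant acceleration is computed; drag, mixing, and
# cooling by expansion during ascent are ignored.

R_DRY = 287.05      # specific gas constant for dry air, J kg^-1 K^-1
G = 9.81            # gravitational acceleration, m s^-2
P = 101_325.0       # assumed surface pressure, Pa


def air_density(temp_k: float, pressure_pa: float = P) -> float:
    """Density (kg m^-3) of dry air from the ideal gas law, rho = p / (R T)."""
    return pressure_pa / (R_DRY * temp_k)


def buoyant_acceleration(parcel_k: float, environment_k: float) -> float:
    """Upward acceleration (m s^-2) of a parcel at the same pressure as its surroundings."""
    rho_parcel = air_density(parcel_k)
    rho_env = air_density(environment_k)
    return G * (rho_env - rho_parcel) / rho_parcel


warm, cool = 300.0, 290.0
print(f"density at {warm} K: {air_density(warm):.3f} kg/m^3")
print(f"density at {cool} K: {air_density(cool):.3f} kg/m^3")
print(f"initial upward acceleration of the warm parcel: "
      f"{buoyant_acceleration(warm, cool):.3f} m/s^2")
```

Because both densities share the same pressure, the acceleration reduces to g(T_parcel − T_environment)/T_environment. With the assumed 10 K contrast, it is about 0.34 m/s².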

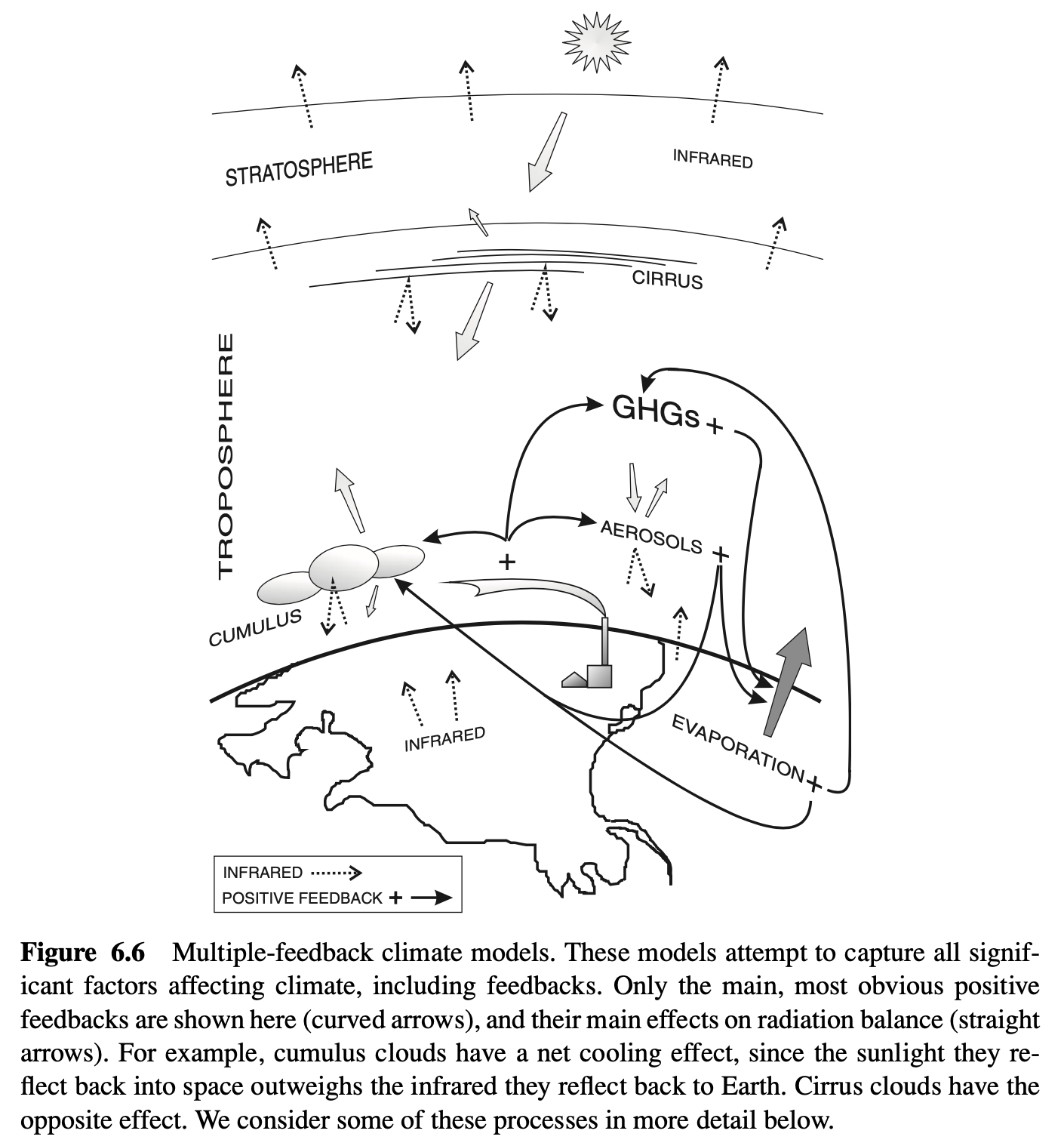

Yes 2: The Anthropogenic Forcing Argument. The greenhouse argument is, admittedly, a simplification, but it does get to the heart of the matter. Of course, the climate is complex, just as the last objection states; in fact, its sketch of the real climate barely scratches the surface. That is why we rely on sophisticated climate models to tell us what the climate will do. There simply is no way that the human mind can completely comprehend a system as chaotic as the climate. It is just too complex. In addition to the factors you mention, it also involves more complex things, such as latent heat exchanges when water vaporizes or condenses. It also involves aerosols, whose main varieties are sulfates, smoke, and just plain old dust. These models include convection, the main concern of the previous objection. Nothing that makes any difference at all to climate is left out of these models. In fact, GWT employs a hierarchy of models, some devoted to a single phenomenon such as convection or air–ocean heat transfers, and some that put all of the pieces together again so that we can see the big picture.20 In these models the currency of the realm is radiation. All of Earth’s climate energy comes to the planet as radiation and leaves as radiation. Convection, aerosols, GHGs, and so on, are relevant to climate only insofar as they affect the radiation balance of the planet. So all of these things are reduced to a set of parameters quantifying their radiation effects; this method is called parameterization.21

One of the things that climate models tell us is that the overall effect of GHGs is to absorb infrared radiation and reemit part of it back down toward Earth’s surface. That is the kernel of truth that is captured in the greenhouse argument, despite its simplifications. Indeed, that is its whole point: to make the role of GHGs plain. When scientists speak about the greenhouse effect (or greenhouse warming), they are talking about this overall warming effect of GHGs, as shown in Figure 6.4. Other things being equal, adding GHGs to the atmosphere slows heat’s escape. To state it in a homely way, additional GHGs cause heat in its infrared form to bounce around more inside the atmosphere before it escapes into space. This increases the heat the atmosphere contains and thus raises its temperature. This effect, known popularly as the greenhouse effect, is called anthropogenic forcing when human activities are the source of the added GHGs, and has been calculated precisely.22
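For CO₂, the best-known simplified form of such a calculation is the logarithmic expression of Myhre et al. (1998), ΔF = 5.35 ln(C/C₀) W/m², which IPCC assessments have used. It is only an assumption that this is the calculation behind note 22. The sketch below simply evaluates the expression against an assumed preindustrial baseline of 280 ppm.

```python
import math

# Simplified logarithmic expression for CO2 radiative forcing,
# dF = 5.35 * ln(C / C0) W m^-2 (Myhre et al. 1998). Using it here as a
# stand-in for the calculation cited in the text is an assumption, as is
# the 280 ppm preindustrial baseline.

ALPHA = 5.35  # W m^-2, fitted coefficient of the simplified expression


def co2_forcing(c_ppm: float, c0_ppm: float = 280.0) -> float:
    """Radiative forcing (W m^-2) from changing CO2 from c0_ppm to c_ppm."""
    if c_ppm <= 0 or c0_ppm <= 0:
        raise ValueError("concentrations must be positive")
    return ALPHA * math.log(c_ppm / c0_ppm)


for c in (280.0, 380.0, 560.0, 1120.0):
    print(f"{c:6.0f} ppm: {co2_forcing(c):5.2f} W/m^2")
```

Because the expression is logarithmic, each doubling of CO₂ adds the same forcing, about 3.7 W/m², whatever the starting concentration.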

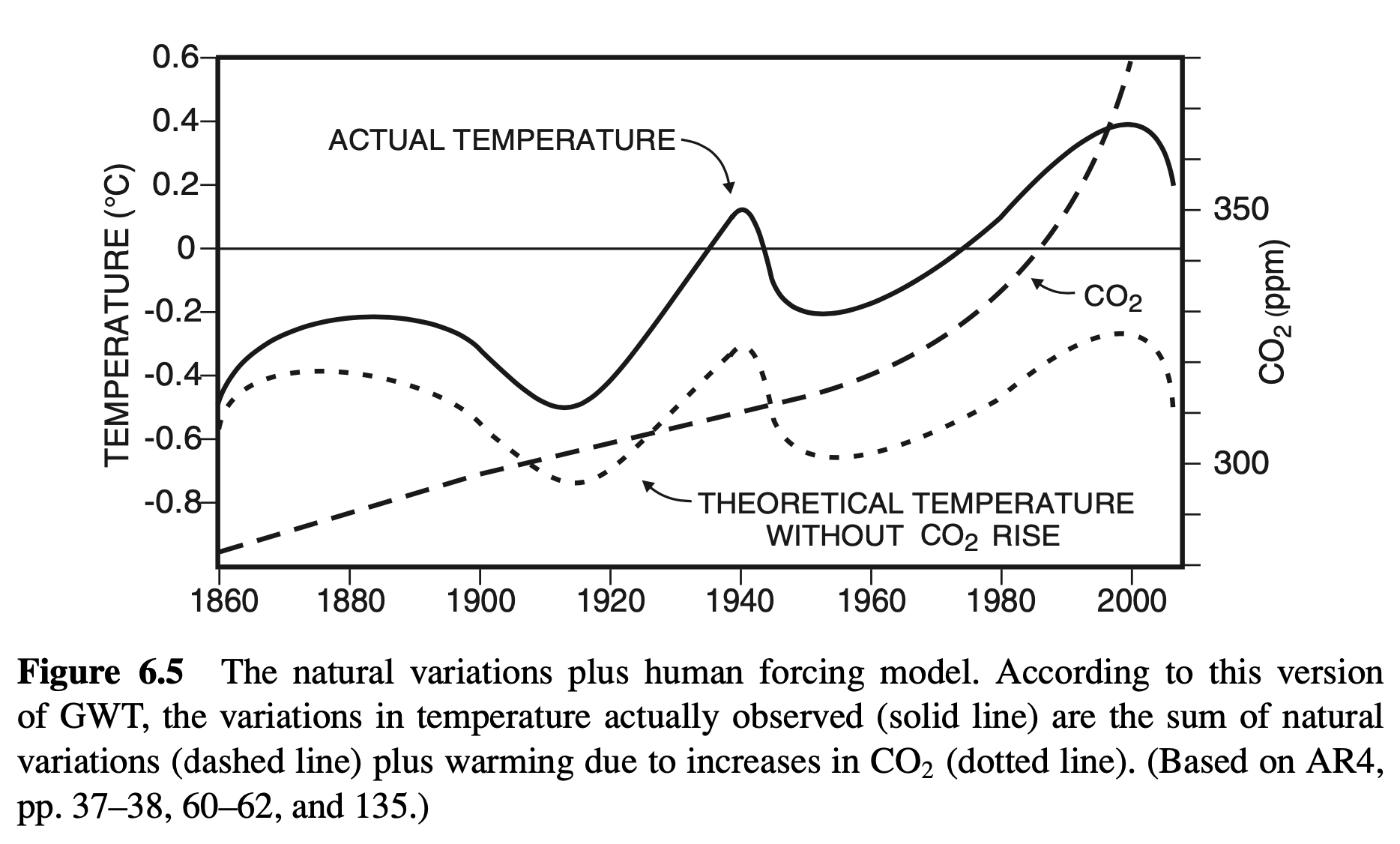

Thus, GWT is the claim that part of the temperature rise we have observed since 1750 is due to anthropogenic forcing. Global temperature varies continually because of natural variations anyway. We can therefore think of it as the sum of natural variation plus human forcing, which gives us the NV + HF model of GWT, shown in Figure 6.5.

No 2: GWT Requires a Predictive Model of Climate as a Whole. Now that the complexity of the problem is out in the open, we have to realize something. Unless we know what the climate would have been like given natural variations alone, we can only assume that a specific portion of the current temperature is due to human forcing by GHGs. No matter what the temperature is, or whether it is going up or down, it can always be claimed that some portion of it is due to human forcing. The only way that actual temperature data can be used in support of the NV + HF model of GWT is to have independent calculations of both natural variations (NVs) and human forcing (HF). Without independent calculations for both, GWT fails to say anything specific or precise about what the temperature will be, so the question of whether it is true simply cannot be answered. To put it another way, until we know what the natural variations will be, we do not know what the NV + HF model is saying. The NV + HF model is oversimplified. It is an efficient way to explain GWT to the climatologically unschooled (and to persuade them to believe it), but it is too vague to be tested or to serve as a real scientific hypothesis. Unless we know what the climate would have done without human forcing, we cannot measure the effects of human forcing. This is reflected in the fact that officially, at least, IPCC climate scientists do not use the NV + HF model as part of the scientific basis for GWT.23 Instead, they try to explain past climates, including even paleoclimates, to show that their climate models are up to the task of predicting warming over the coming centuries. However, it is not at all clear that their models are capable of predicting, retrodicting, or even accommodating past climates. The anthropogenic forcing model simply ignores the necessity for accurate prediction of past and current climate, and the huge, unsolved problems that this entails for GWT.
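A toy calculation makes the point of this objection concrete. If observed temperature is modeled as NV + HF, then any assumed natural-variation series leaves a human-forcing remainder that reproduces the observations exactly. The anomaly values and the three natural-variation scenarios below are hypothetical placeholders, not climate data. `implied_human_forcing` is an illustrative helper.

```python
# Sketch of the NV + HF decomposition: observed = natural + human.
# The "observed" anomalies and the natural-variation scenarios are made-up
# placeholder numbers, not data. Whatever natural series is assumed, the
# human-forcing share is just the remainder, so observations alone cannot
# fix the split.

observed = [0.00, 0.10, 0.15, 0.30, 0.40]   # hypothetical anomalies, deg C


def implied_human_forcing(obs: list[float], natural: list[float]) -> list[float]:
    """Human-forcing component implied by an assumed natural-variation series."""
    if len(obs) != len(natural):
        raise ValueError("series must have equal length")
    return [round(o - n, 6) for o, n in zip(obs, natural)]


# Three hypothetical natural-variation scenarios.
scenarios = {
    "flat natural climate": [0.0] * len(observed),
    "natural warming trend": [0.00, 0.08, 0.12, 0.24, 0.32],
    "natural cooling trend": [0.00, -0.05, -0.10, -0.15, -0.20],
}

for name, natural in scenarios.items():
    hf = implied_human_forcing(observed, natural)
    # Every scenario reproduces the observations: NV + HF == observed.
    assert all(abs(n + h - o) < 1e-6 for n, h, o in zip(natural, hf, observed))
    print(f"{name:22s} implied HF: {hf}")
```

All three scenarios fit the same observations perfectly, yet they assign the final anomaly of 0.40 °C very differently. They attribute 0.40, 0.08 or 0.60 °C to human forcing. Only an independent estimate of the natural series selects among them.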

Yes 3: Multiple Feedback Models Prove GWT. Admittedly, the NV + HF model is simplified, although at its core there is a kernel of truth: that human GHG forcing will inevitably warm the climate. However, the IPCC recognizes the criticism that we must be able to model what the climate would have been without human forcing. To do this, the IPCC uses the most sophisticated climate models in existence, multiple feedback models (MFMs). MFMs incorporate all the significant factors that influence climate. They recognize that these factors interact with each other in complex ways. Sometimes the factors reinforce each other (positive feedback), and sometimes they weaken each other (negative feedback). A rough idea of MFMs is given in Figure 6.6. MFMs enable us to provide what you call for: independent calculations of both natural variations (NVs) and human forcing (HF). Indeed, the IPCC’s official argument in favor of GWT turns on comparing models of natural variations in temperature with models that also include anthropogenic forcing.24 When the two sorts of models are compared, it is obvious that the actual rise of global temperature since 1970 or so cannot be explained except by GWT.
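The distinction between positive and negative feedback is often expressed with the linear feedback relation ΔT = ΔT₀ / (1 − f). Here ΔT₀ is the direct response and f is the net feedback factor. This relation is a textbook simplification, not the internal structure of any particular MFM. The direct response of 1 °C and the values of f below are illustrative assumptions.

```python
# Standard linear feedback relation: a direct response dt0 is scaled by
# 1 / (1 - f), where f is the net feedback factor. The values of dt0 and f
# are illustrative assumptions, not outputs of any multiple feedback model.


def amplified_response(dt0: float, f: float) -> float:
    """Equilibrium response after feedbacks; the linear form needs f < 1."""
    if f >= 1.0:
        raise ValueError("f >= 1: the linear feedback relation no longer applies")
    return dt0 / (1.0 - f)


direct = 1.0  # hypothetical direct (no-feedback) warming, deg C
for f in (-0.5, 0.0, 0.5):
    kind = "negative" if f < 0 else "positive" if f > 0 else "no"
    print(f"f = {f:+.1f} ({kind} feedback): response "
          f"{amplified_response(direct, f):.2f} deg C")
```

A negative feedback (f = −0.5) shrinks a 1 °C direct response to about 0.67 °C, while a positive one (f = 0.5) doubles it. As f approaches 1, the linear relation breaks down.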

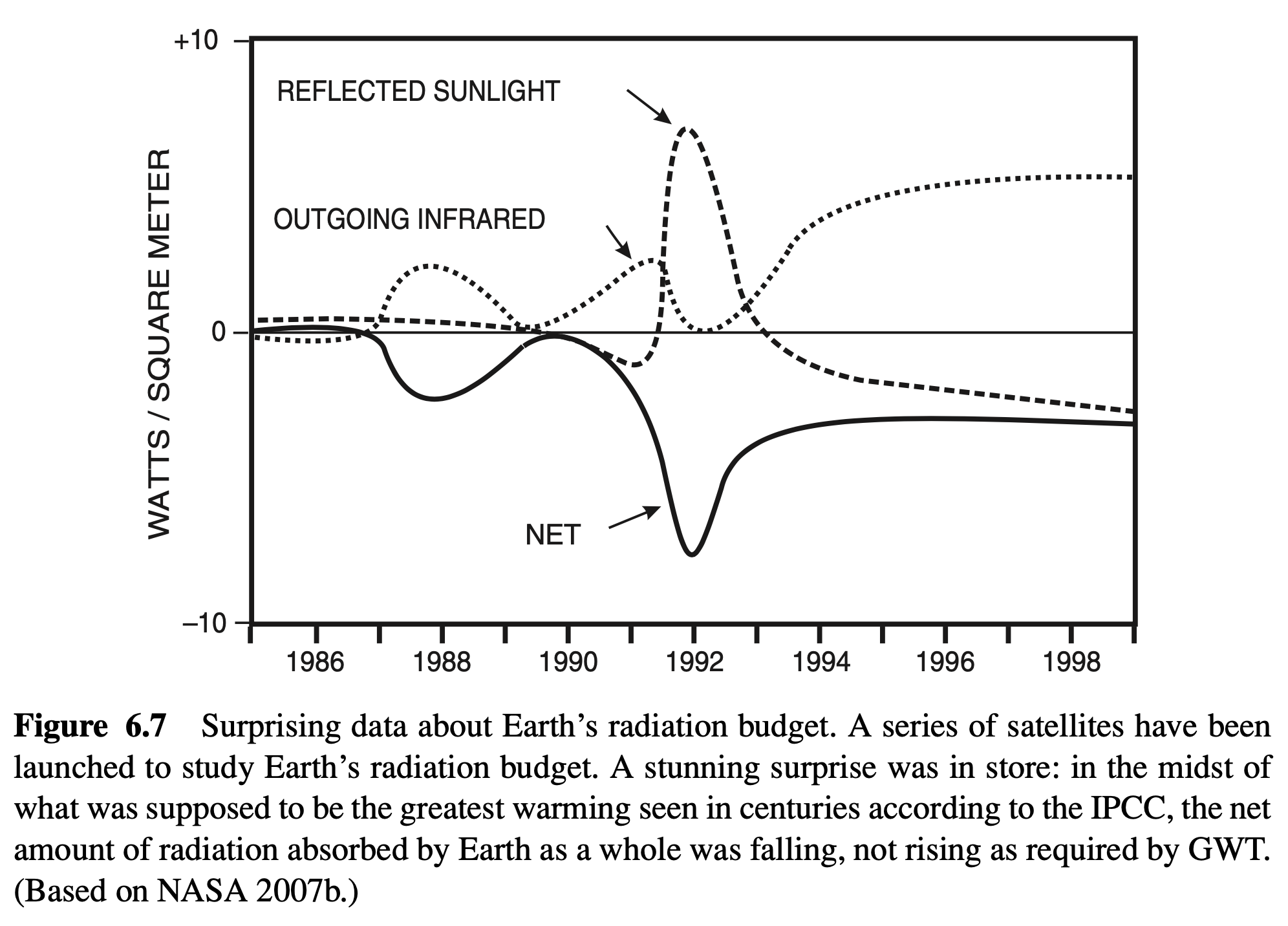

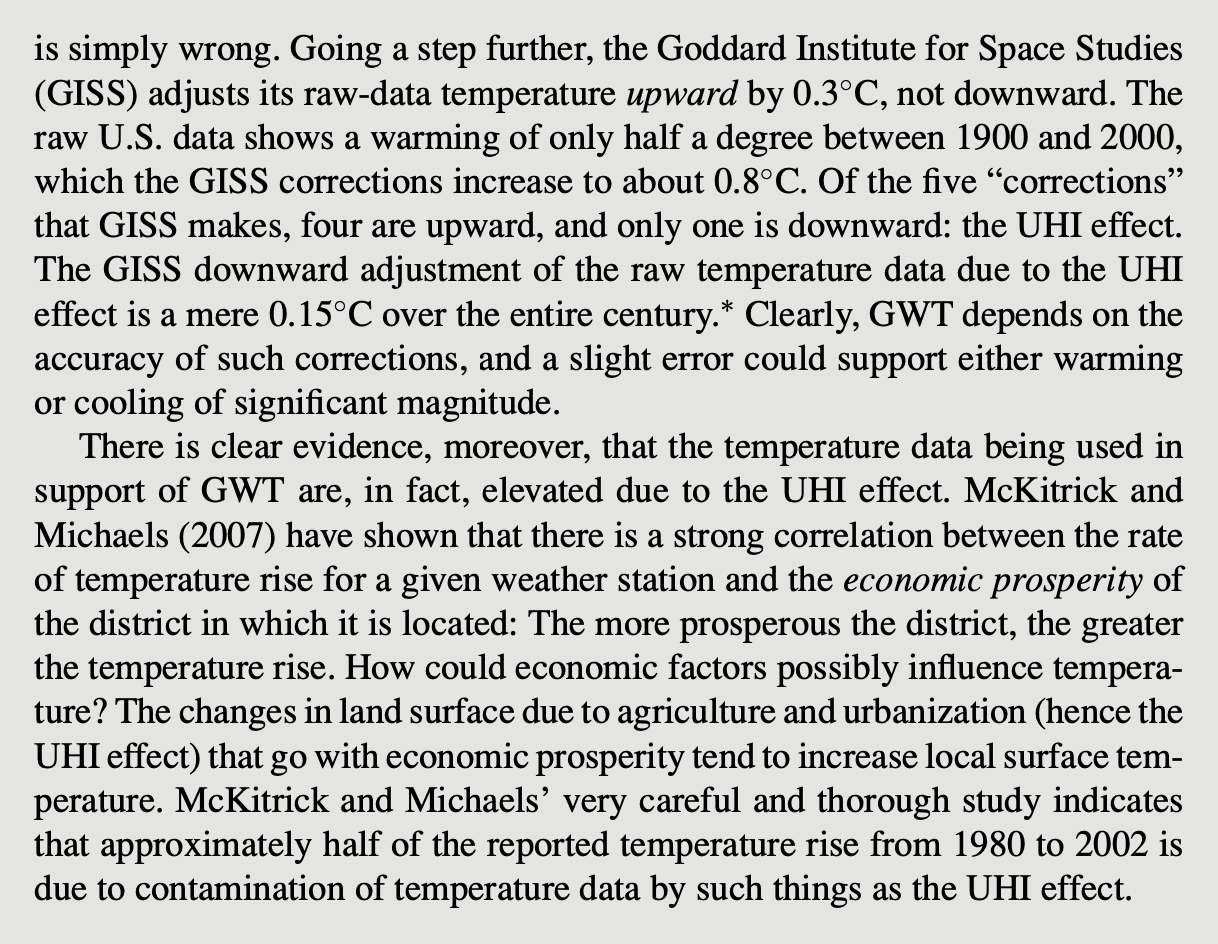

No 3: Earth’s Radiation Balance Contradicts GWT. Once it is granted that we must use MFMs, it is granted that GWT requires nothing less than an adequate model of the entire climate system. We simply do not have that level of understanding at this point in history. Every climate scientist, including the authors of the IPCC assessment reports, admits to significant gaps in data, theory, and modeling capacity that systematically undermine our confidence in GWT. For example, data recently emerged that contradict the basic premise of GWT. All three models of GWT (the greenhouse model, the anthropogenic forcing model, and the multiple feedback model) have the same core thesis: that Earth’s outgoing infrared heat has been decreasing, and this has caused temperatures to rise. It should come as quite a shock to GWT supporters, then, that Earth’s radiation balance falsifies this thesis. Recent data show an increase in the amount of heat radiated from Earth into outer space (e.g., Chen et al. 2002, Hartmann 2002, Wielicki et al. 2002): “Satellite observations suggest that the thermal radiation emitted by Earth to space increased by more than 5 watts per square meter, while reflected sunlight decreased by less than 2 watts per square meter, in the tropics over the period 1985–2000, with most of the increase occurring after 1990” (Chen et al. 2002, p. 838). In other words, over the all-important tropics, at least, the solar radiation absorbed by Earth increased, because less sunlight was reflected. This increase was overwhelmed by an increase in the heat escaping Earth, for a net increase in outgoing radiation,25 as diagrammed in Figure 6.7.26
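The arithmetic behind “a net increase in outgoing radiation” can be made explicit using only the bounds in the Chen et al. quotation. The sketch assumes that the incoming sunlight itself was unchanged. A decrease in reflected sunlight then equals an increase in absorbed sunlight. `net_balance_change` is an illustrative helper.

```python
# Net change in the top-of-atmosphere energy balance implied by the bounds
# quoted from Chen et al. (2002) for the tropics, 1985-2000. Assumes the
# incoming sunlight was unchanged, so less reflected sunlight means more
# absorbed sunlight. Positive = Earth gains energy; negative = Earth loses it.


def net_balance_change(d_outgoing_longwave: float, d_reflected_solar: float) -> float:
    """Change in (absorbed solar - outgoing longwave), in W m^-2."""
    d_absorbed_solar = -d_reflected_solar
    return d_absorbed_solar - d_outgoing_longwave


# Bounding values from the quotation: longwave rose by more than 5 W/m^2,
# reflected sunlight fell by less than 2 W/m^2.
change = net_balance_change(d_outgoing_longwave=5.0, d_reflected_solar=-2.0)
print(f"net balance change at the quoted bounds: {change:+.1f} W/m^2")
```

At the quoted bounds the net change is −3 W/m². The outgoing longwave increase exceeded 5 W/m² and the absorbed-solar increase was under 2 W/m². For the tropics over that period, the quotation therefore implies a net change more negative than −3 W/m².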

This result took the entire climatological community by surprise, which is a nice indicator of the current state of climatological science. With all due respect to the brilliant work being done by numerous climate scientists, climatology is still a young science that has a long way to go. A fast rise in temperature occurred precisely while outgoing radiation was increasing. This is the opposite of what climatologists would expect and the opposite of what GWT requires. When it comes to pure science, such unexpected results are actually very inspiring, since they shake up presuppositions and lead to new insights, new ideas, and new approaches. From the point of view of the IPCC, however, this result can only be problematic, because it shows just how little we understand about Earth’s complex, indeed chaotic, climate. Pure climatological scientists have been inspired to reassess Earth’s various modes of heat storage. One explanation of the rise in surface temperatures at the very same time that outgoing radiation increased would be a release of heat from the oceans. This, in turn, might help explain why temperatures have been falling over the last decade (since 1996), in contradiction to what GWT predicts.27

In any case, GWT is based on the premise that because of human GHGs, the net radiation balance for the planet is positive. Unfortunately for GWT, this premise has been shown to be false during the very period when the most global warming is supposed to have occurred. GWT provides neither explanation nor prediction of global warming when this premise is false. This means that the period of warming in question is not evidence in favor of GWT. Whatever the causal mechanism of the warming may have been, it was not the one outlined in GWT. A period of warming that is always cited as strongly indicative of GWT, from 1985 to 2000, occurred during a climate phase in which Earth was losing heat, whereas GWT requires that it be gaining heat.

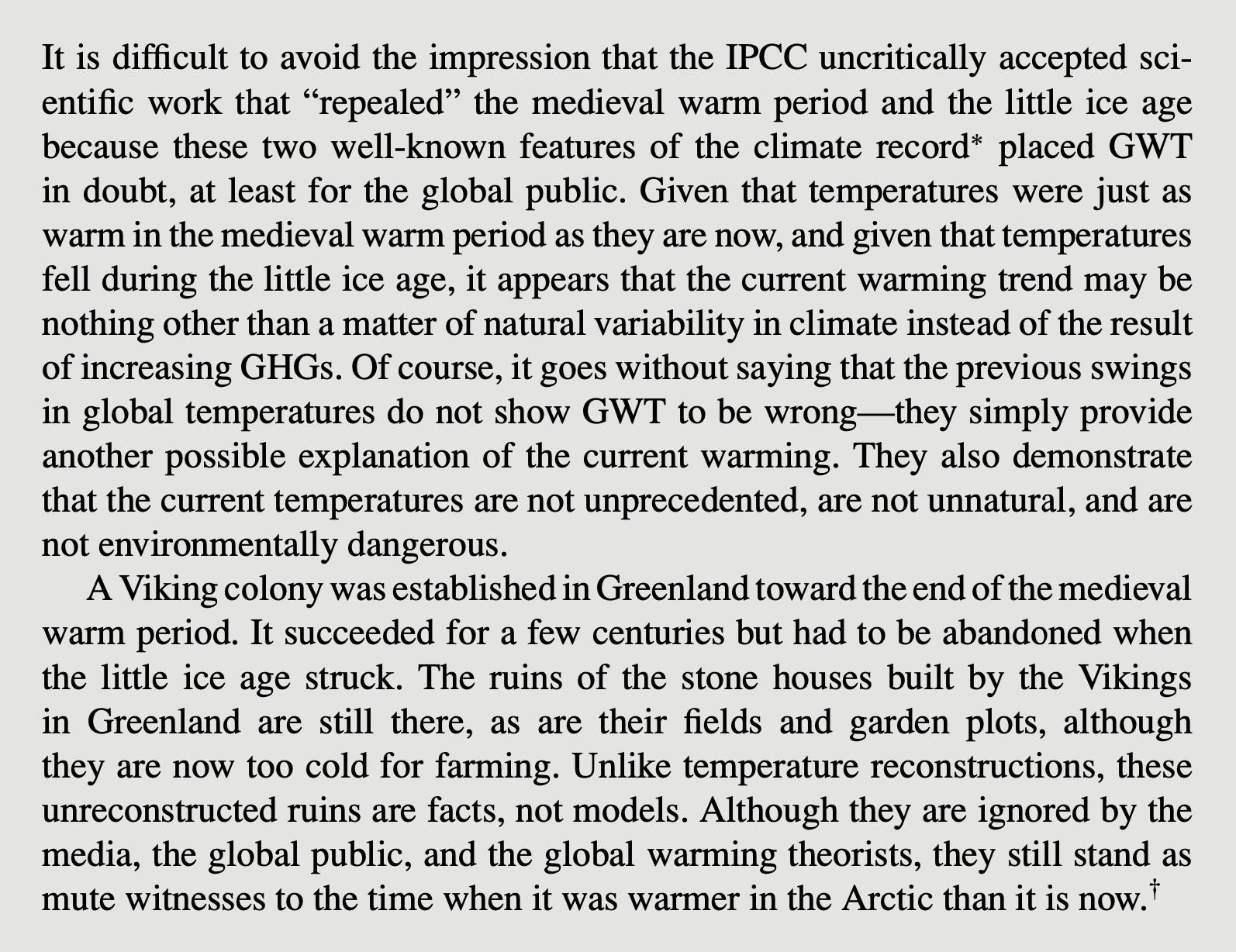

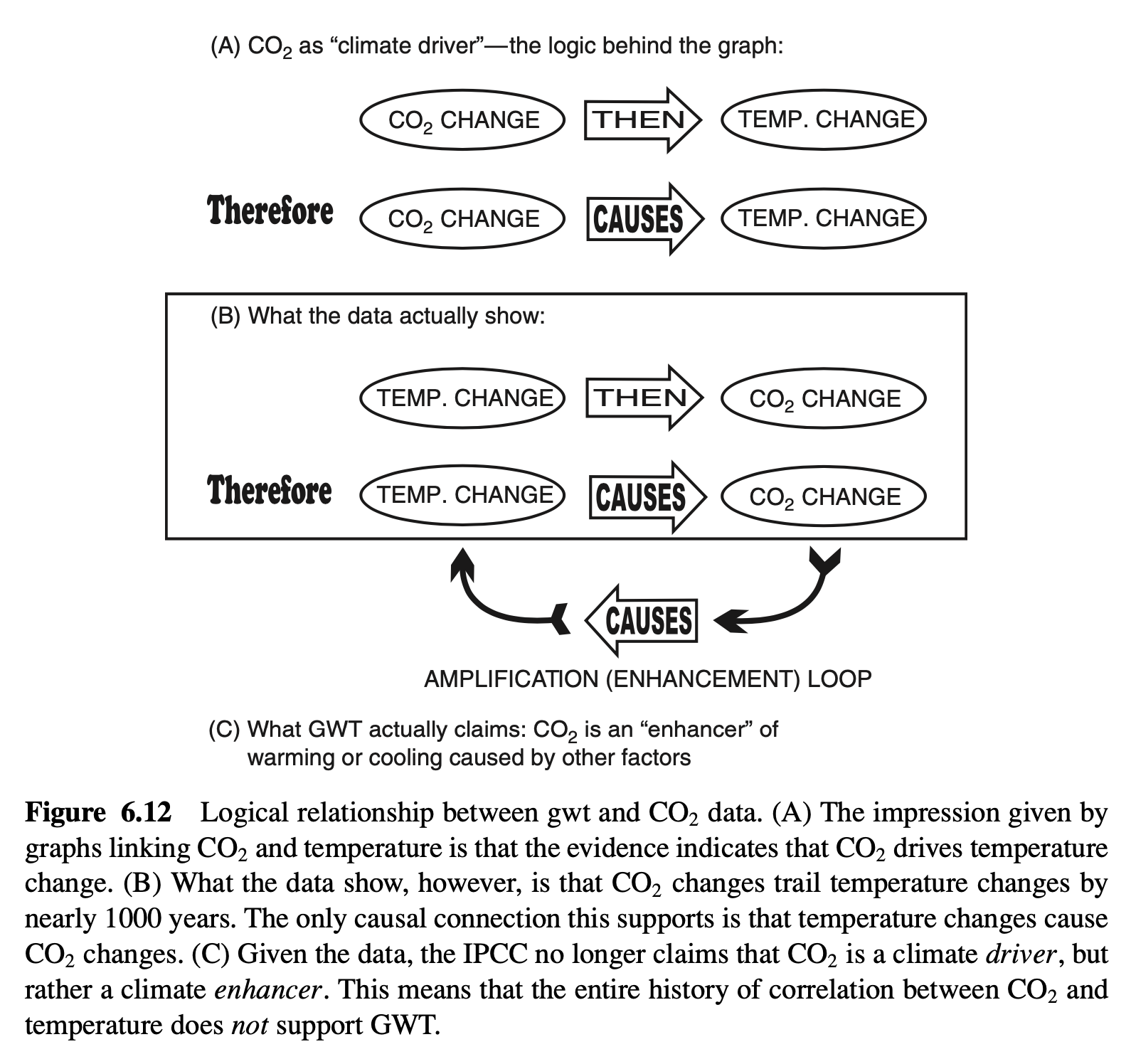

Yes 4: The Link between CO₂ and Temperature. The case for GWT does not rest on multiple feedback models alone. There is a body of evidence that supports GWT directly, regardless of whether MFMs work or not: the correlation between CO₂ and temperature. The strength of this argument is obvious once the data are represented graphically, as in Figure 6.10.36 This is a powerful argument in favor of GWT, so it is no wonder that it figures prominently in IPCC assessment reports and insightful documentaries, such as Al Gore’s movie *An Inconvenient Truth* (Guggenheim 2006).

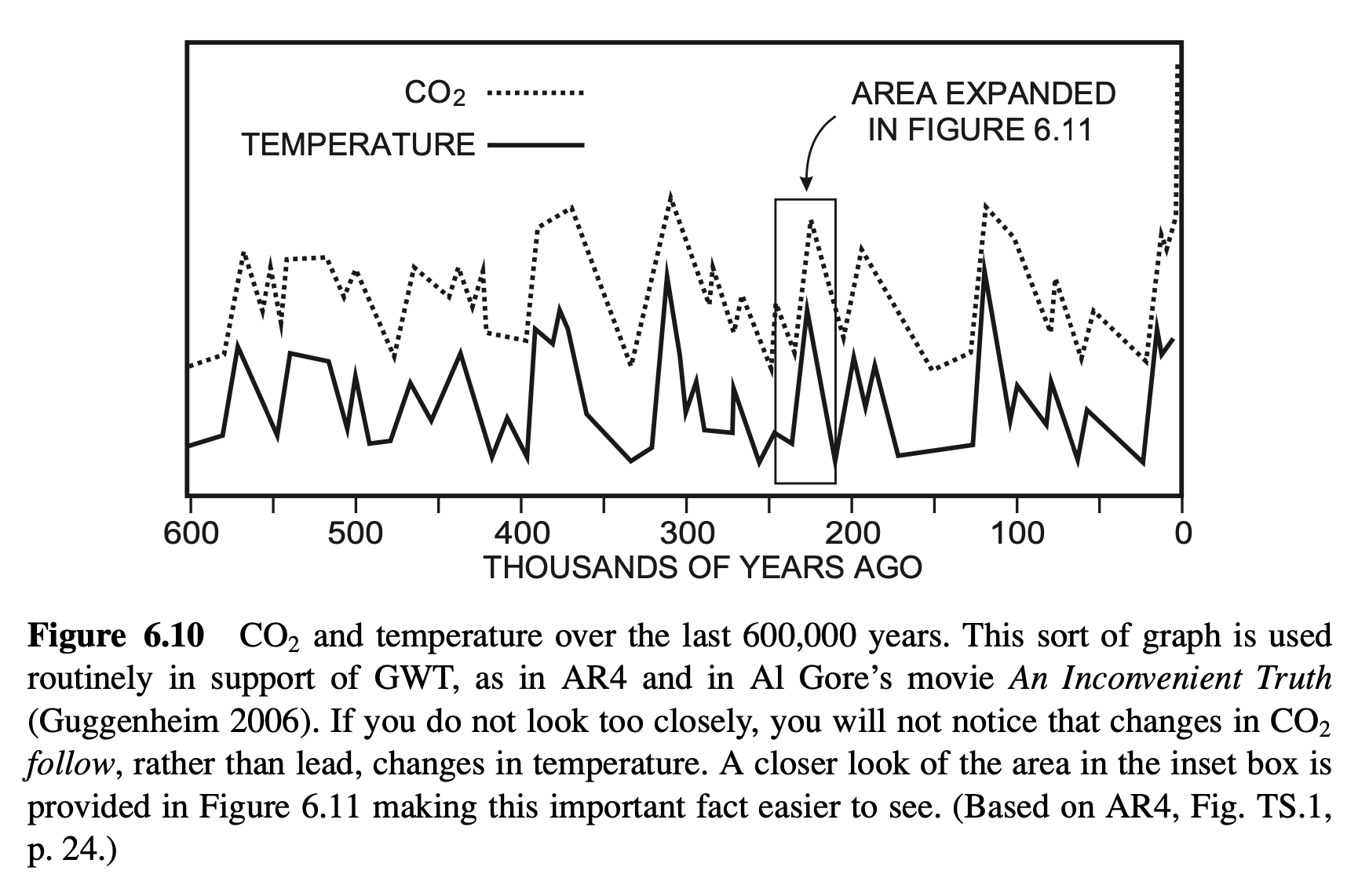

No 4: CO₂ Changes Caused by Temperature Changes. Part of what makes the graph persuasive is its scale: It covers an amazing span of 600,000 years. The scale also makes it impossible to notice that changes in CO₂ follow changes in temperature rather than preceding them. Numerous scientific studies using various techniques have shown that when temperature falls or rises, CO₂ falls or rises about 800 years later on average,37 as shown in Figure 6.11.38 This is important because a cause cannot come after its effect: CO₂ changes that follow temperature changes cannot have caused them. Contrary to the general understanding of GWT, CO₂ is not a climate “driver” that causes major climate changes. Does that mean that GWT is disconfirmed by the CO₂ evidence? No, for GWT can fall back on the claim that CO₂ is merely a climate “enhancer” that amplifies cooling or warming by positive feedback, which, indeed, is the current stance of the IPCC (AR4, pp. 54–57, 85).

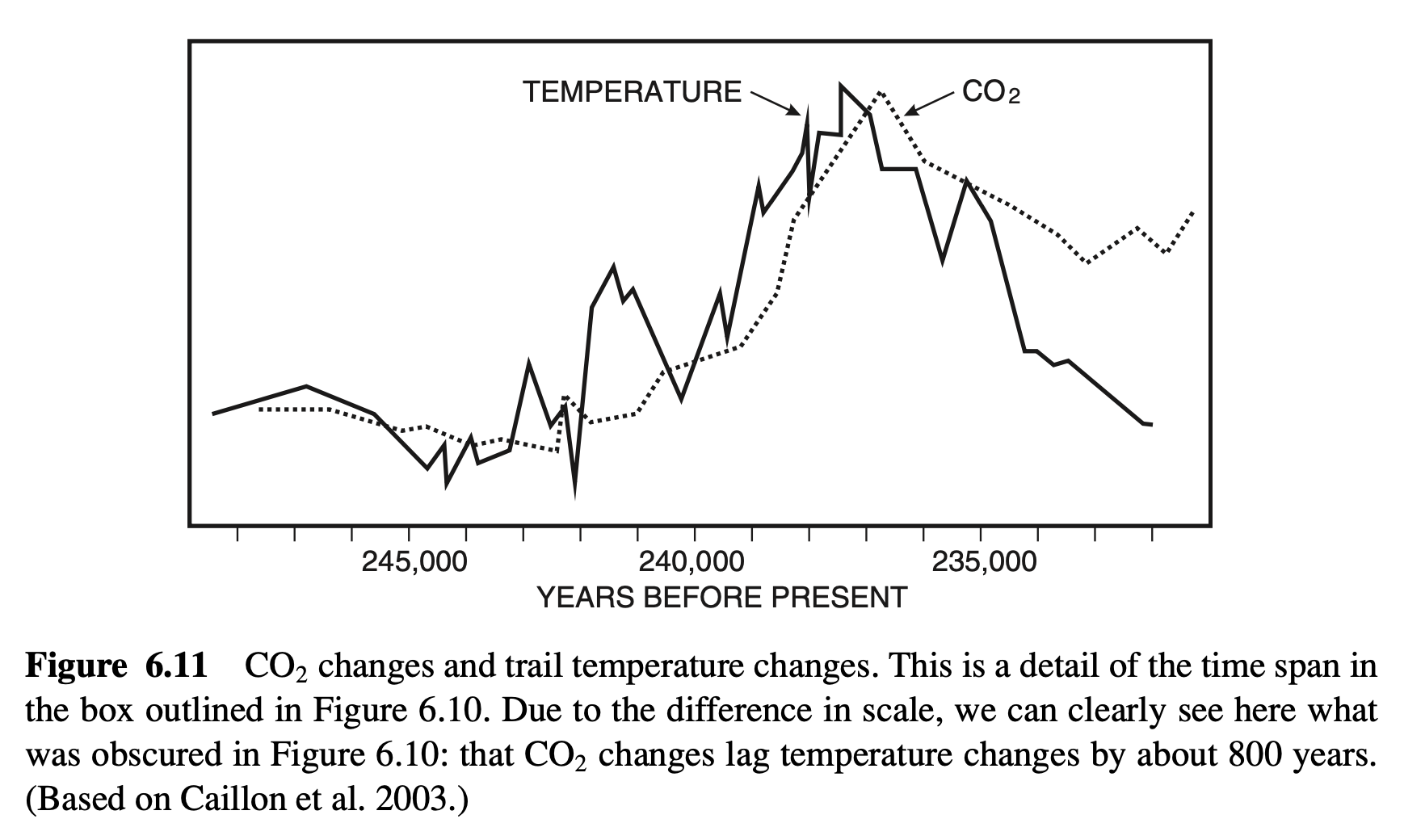

From a logical point of view, however, this changes everything. The hypothesis that CO₂ is a climate driver (that is, that changes in CO₂ cause changes in temperature) had the benefit of simplicity. Data showing CO₂ changes consistently followed by temperature changes would nicely support this simple hypothesis, as shown in Figure 6.12A.

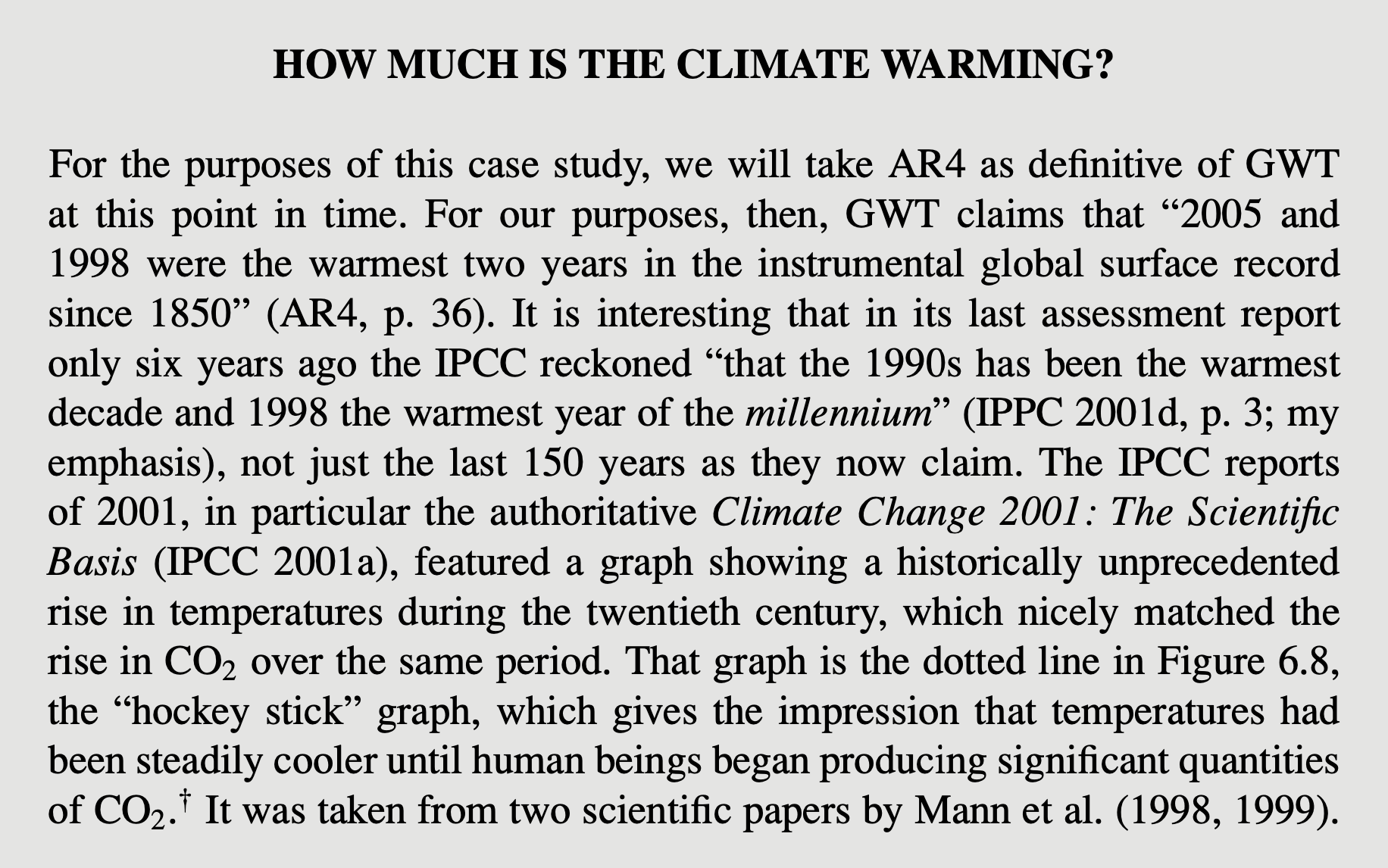

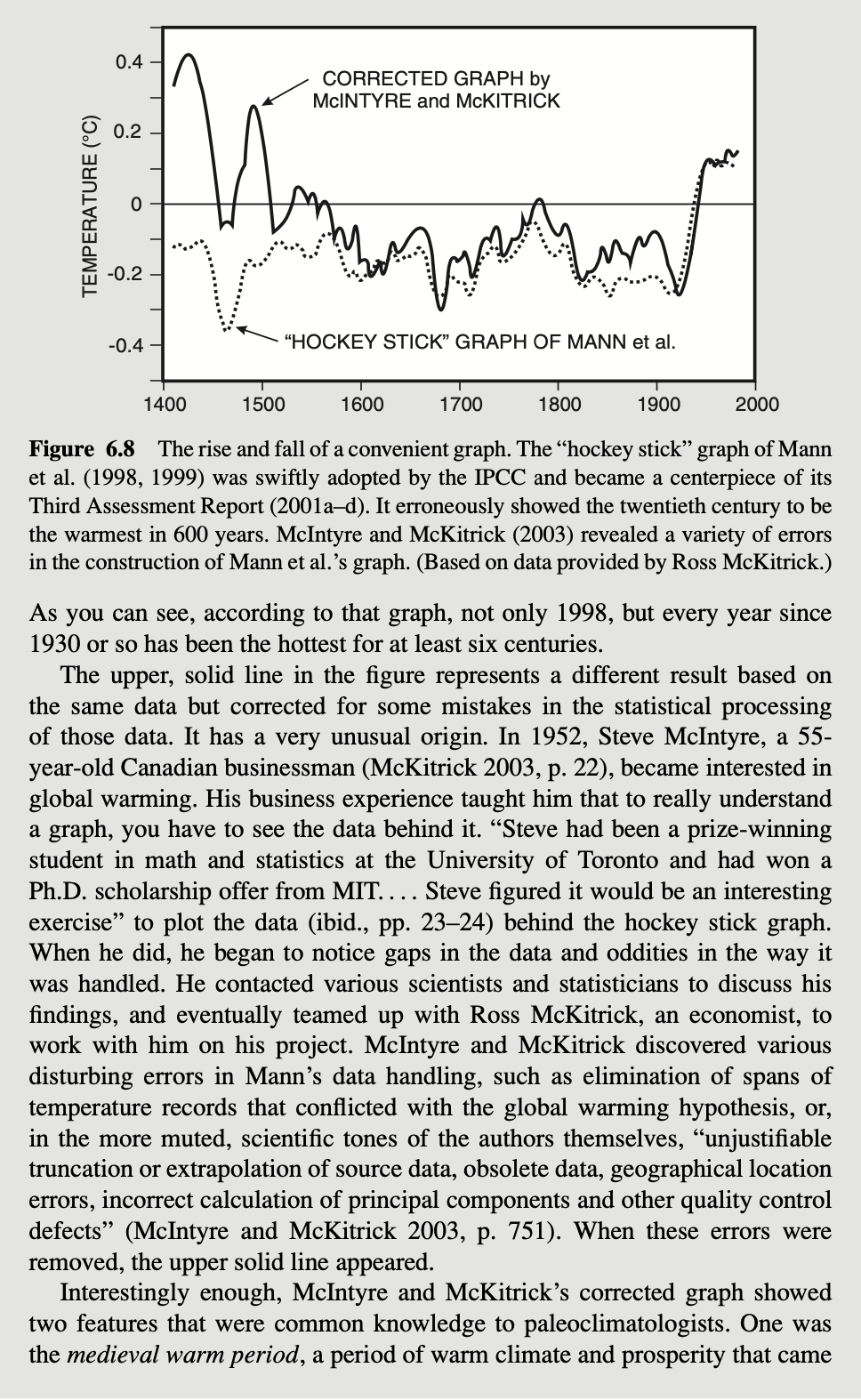

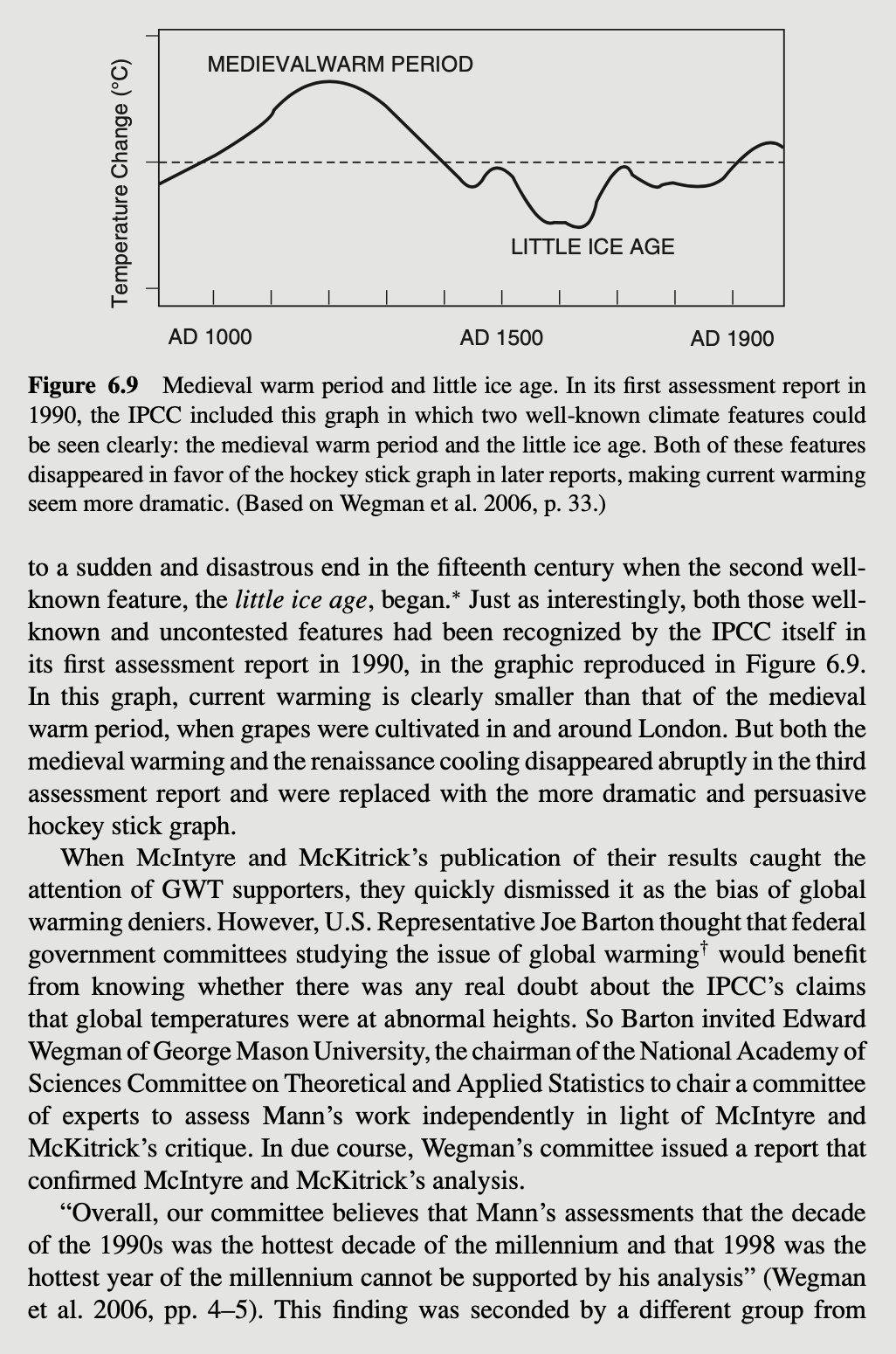

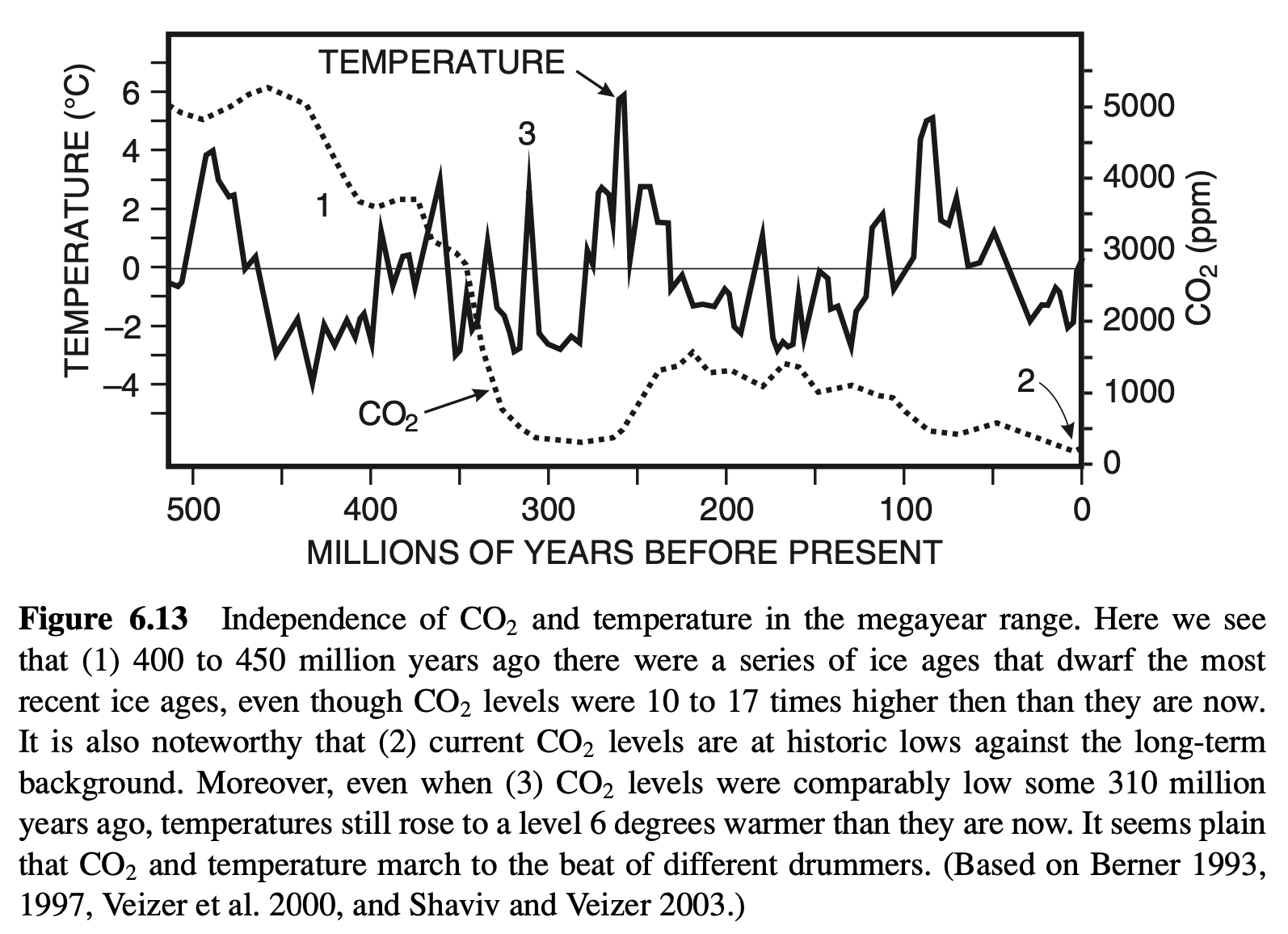

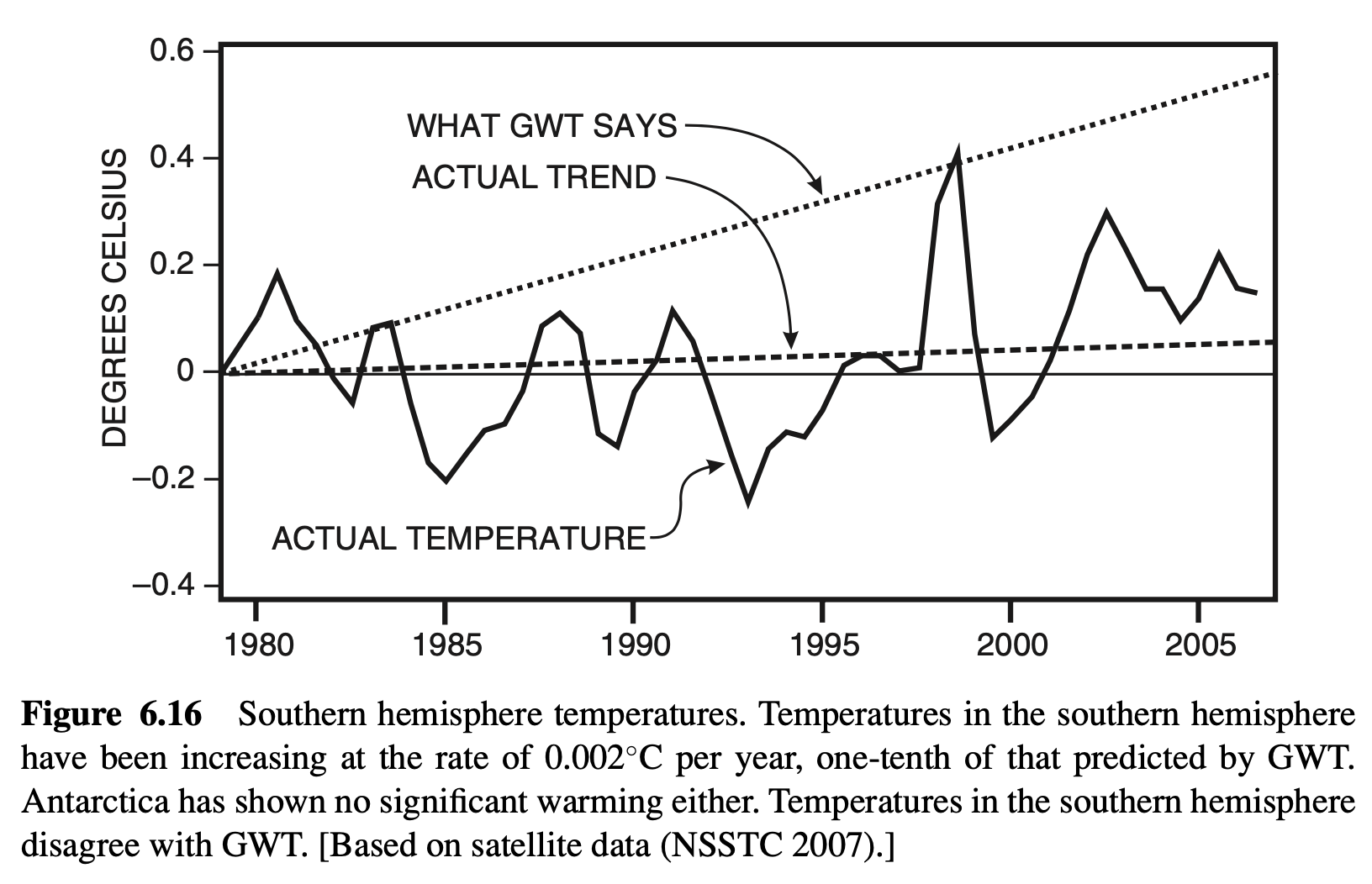

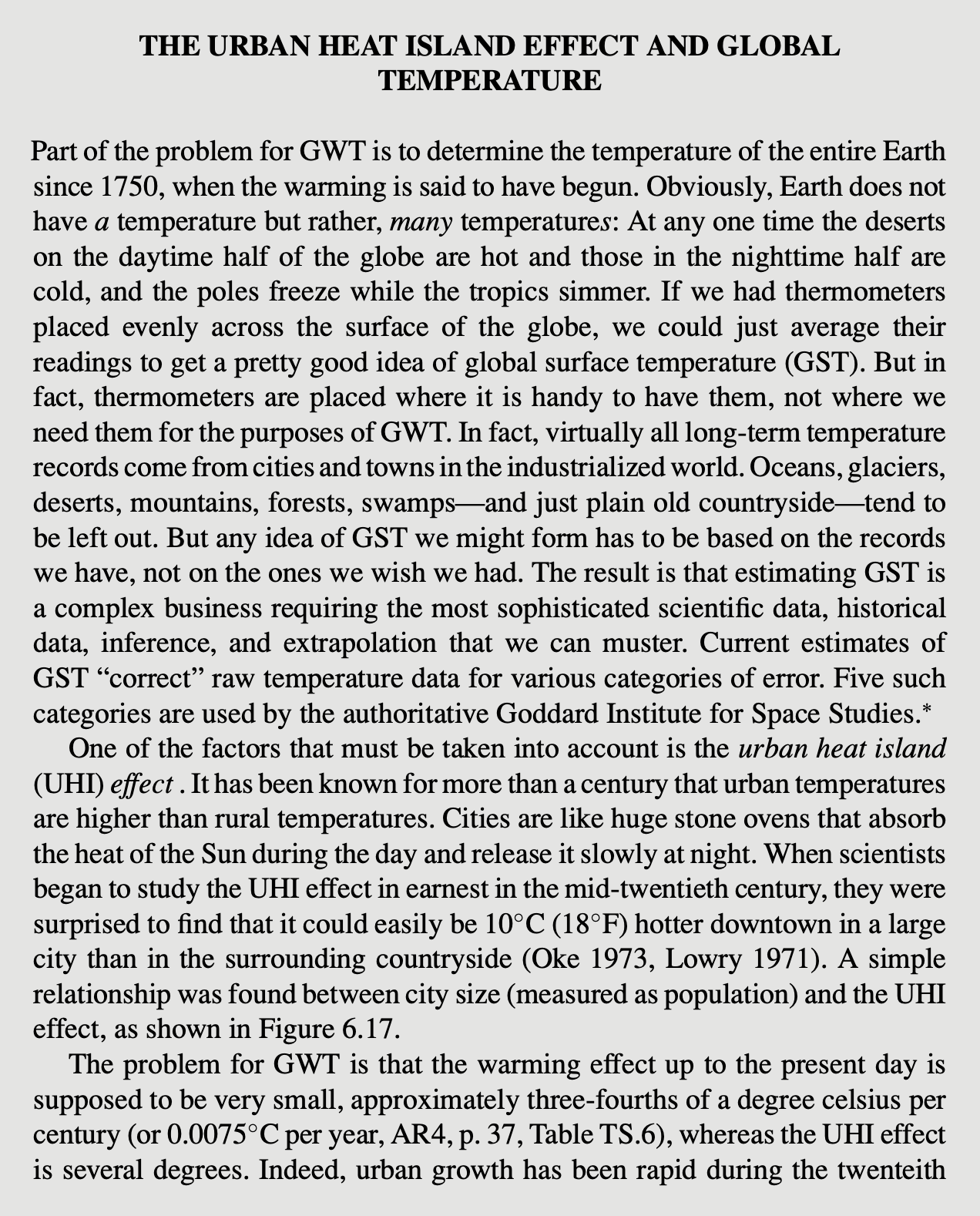

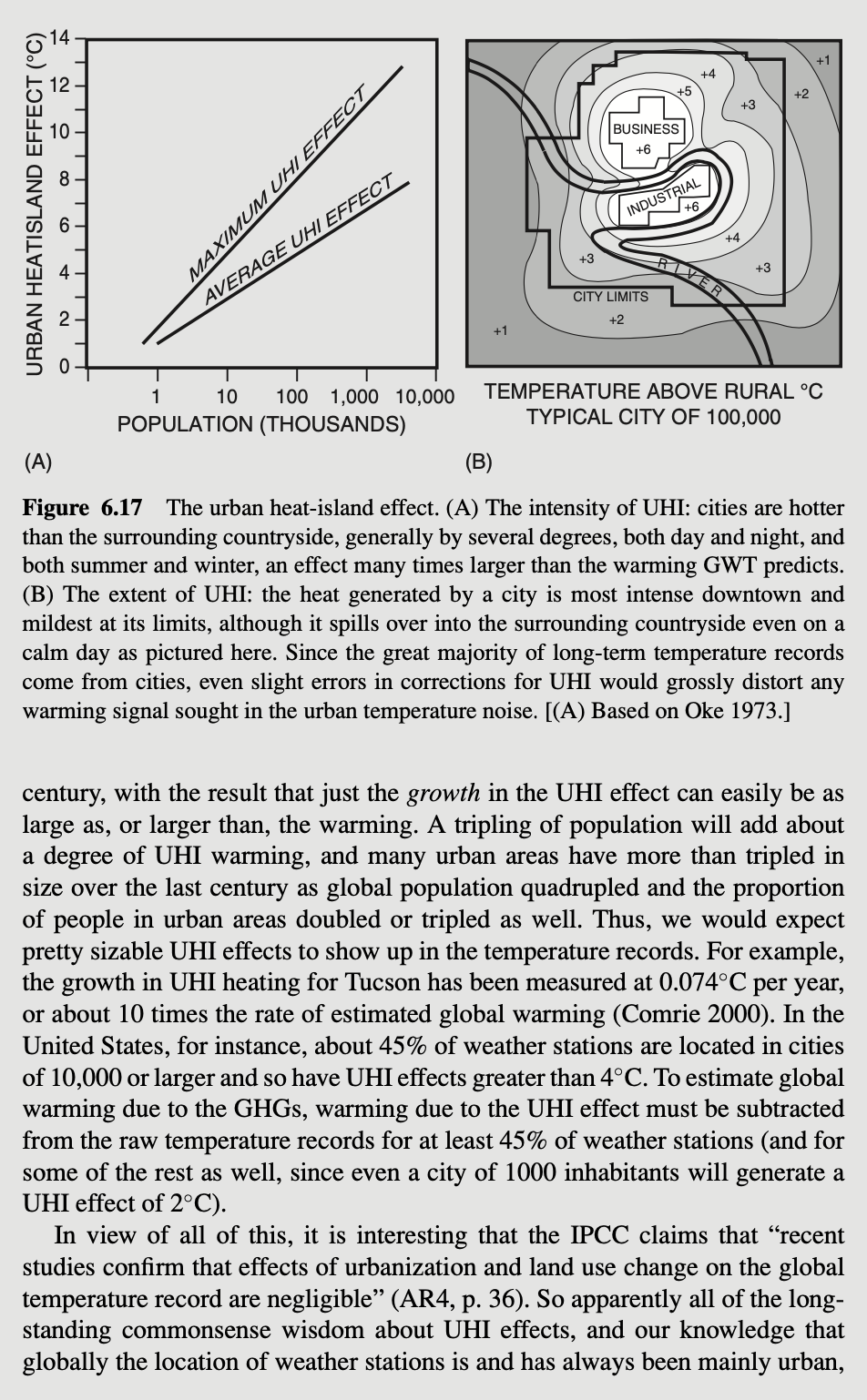

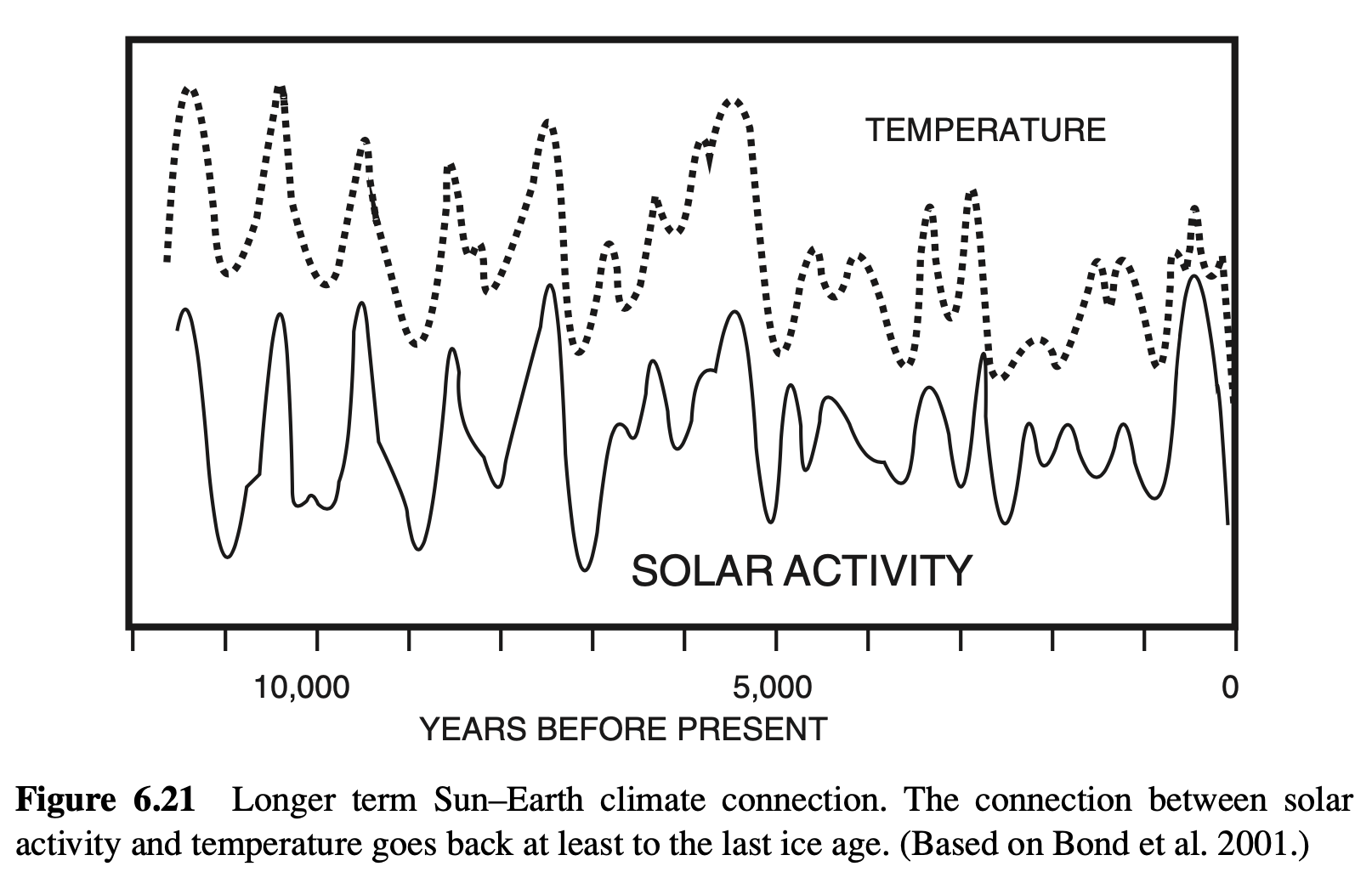

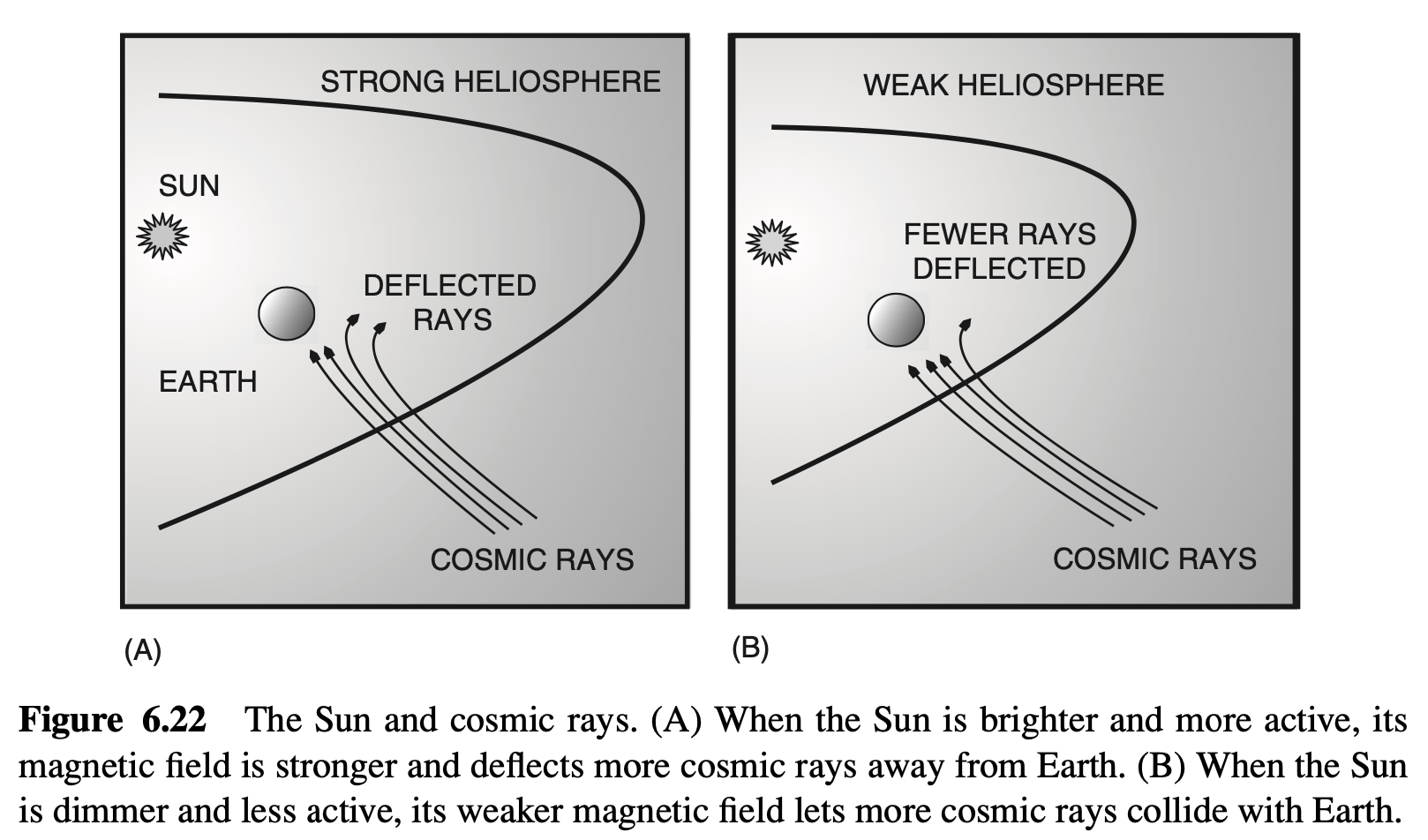

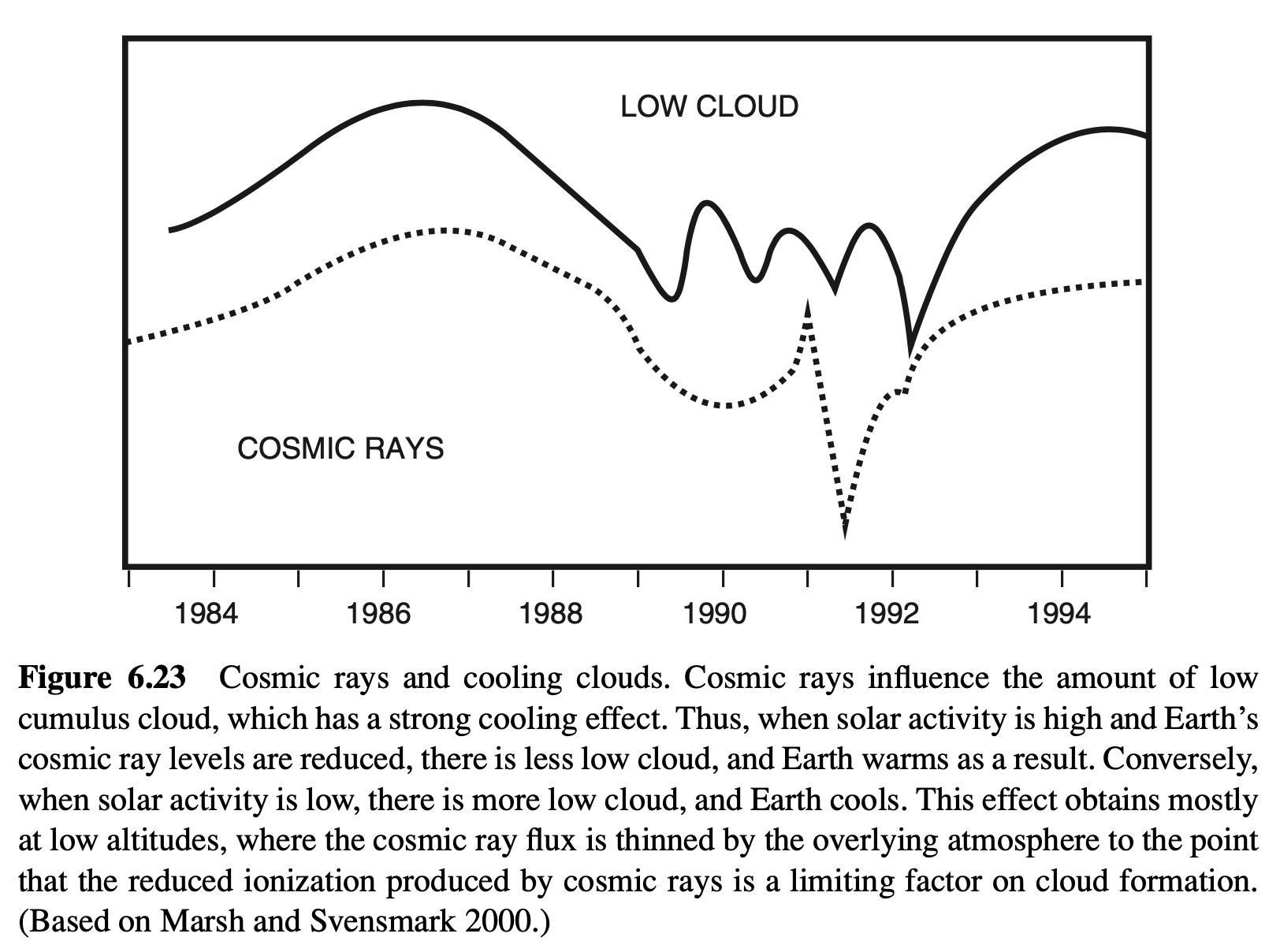

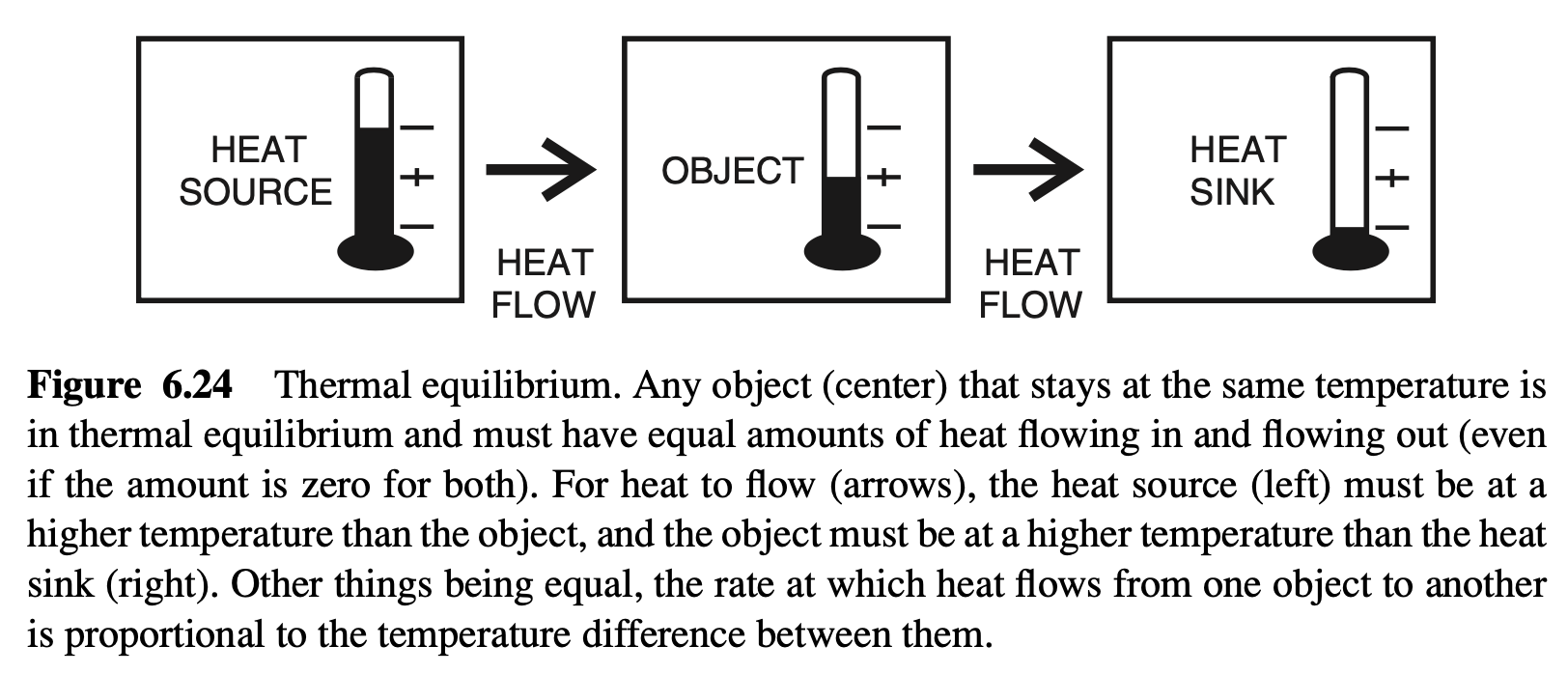

Graphics like the one used by Gore and the IPCC give the impression that the data support a causal linkage from CO₂ changes to temperature changes, as illustrated in Figure 6.12A. But since the data show the reverse sequence, both critics and supporters of GWT now think that temperature change does cause CO₂ change, as in Figure 6.12B.39 GWT supporters have therefore redefined their theory as follows: “Atmospheric CO₂ and temperature in Antarctica co-varied over the past 650,000 years. Available data suggests that CO₂ acts as an amplifying feedback” (AR4, p. 57).